Vulnerabilities, on their own, don’t mean much. You could be staring at thousands of scanner alerts every week, but unless you know which ones truly matter, you’re just reacting to noise. The modern security challenge isn’t about detection anymore; it’s about decision-making. And that’s where vulnerability prioritization comes in.

One security lead we spoke to put it succinctly:

“We get everything, CVSS scores, exploit flags, scanner outputs. But none of it helps us decide what to fix first.”

That frustration reflects a reality many teams face today: an overwhelming volume of data but no clear prioritization strategy.

Vulnerability prioritization is more than just scoring threats. It’s about creating a workflow that filters critical, exploitable, and business-relevant risks from the noise. When you treat every vulnerability as equal, you spread your defenses too thin. But when you prioritize with context, based on exploitability, asset exposure, and potential impact, you shift from reaction to strategy.

This blog is your guide to modern vulnerability prioritization. From the evolution of CVSS to the role of exploit intelligence, from cloud-native realities to predictive scoring systems like EPSS, we’ll break down the frameworks and decisions that matter most right now.

What is Vulnerability Prioritization?

Vulnerability prioritization is the process of deciding which security issues deserve attention first based on their potential risk to an organization. While identifying vulnerabilities is relatively straightforward today with automated scanners and threat feeds, the real challenge lies in determining which ones actually matter.

Not all vulnerabilities are created equal. Some may exist in isolated environments with no access to critical assets. Others may be actively exploited in the wild and affect public-facing systems. A flat view that treats all threats equally leads to wasted time, misallocated resources, and exposure to avoidable breaches.

The goal of vulnerability prioritization is to move away from treating every alert as an emergency and instead apply logic, context, and intelligence to remediation decisions. It enables security teams to focus on what matters most rather than what looks most severe on paper.

When done well, vulnerability prioritization improves operational efficiency, reduces risk exposure, and aligns security activity with real business impact.

Why Vulnerability Prioritization Is Broken in Most Organizations

In many organizations, vulnerability prioritization still relies on outdated approaches and surface-level indicators like CVSS scores. The result is a backlog of alerts marked “critical” without any understanding of their relevance or exploitability.

The Problem With Alert Overload

Security teams often face tens of thousands of vulnerabilities across their environments. Most come from automated tools that prioritize volume over clarity. Without triage mechanisms in place, teams are left either chasing low-impact issues or ignoring real threats due to alert fatigue.

This creates a false sense of urgency, where every scan triggers dozens of high-severity tickets but very few actionable insights.

Why CVSS Alone Does Not Work

CVSS was designed to standardize the measurement of vulnerability severity. But severity is not the same as risk. The CVSS base score, which is what most scanners report, does not account for exploit trends, asset value, or environmental exposure. It rates theoretical danger without measuring real-world likelihood.

For example

- A vulnerability with a 9.8 score may affect an offline internal application

- Another with a lower score may be exploited in current malware campaigns and reside on a public server

Prioritizing based solely on CVSS can lead to critical flaws being overlooked and time wasted on patching low-risk systems.

Context Is the Missing Ingredient

Real prioritization depends on context. This includes

- Exploit intelligence: Knowing whether a vulnerability is actively targeted or used in threat campaigns

- Asset sensitivity: Understanding what the vulnerability touches and how important it is to business functions

- Exposure level: Identifying whether the vulnerability is reachable and if any mitigating controls are in place

These factors are far more predictive of risk than a static score. Yet many security tools and processes fail to include them, defaulting to outdated models.

The Rise of KEV and EPSS

To address these gaps, modern teams are adopting new frameworks

- KEV (Known Exploited Vulnerabilities): A list curated by CISA that includes vulnerabilities with confirmed exploitation in the wild. These are rare but extremely dangerous and should be prioritized immediately.

- EPSS (Exploit Prediction Scoring System): A machine learning model that estimates the probability of a vulnerability being exploited in the next 30 days. It brings dynamic scoring into triage decisions.

When used together with asset context and internal threat models, KEV and EPSS offer a more practical lens on risk. Studies show that using these models can reduce patching effort dramatically while improving actual security outcomes.
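As a rough sketch of how these two signals can sit alongside scanner output, the snippet below orders findings so that KEV-listed CVEs come first, likely-exploited ones second, and everything else last. The `Finding` class, the CVE identifiers, the scores, and the 0.10 EPSS cut-off are all illustrative assumptions; in practice the KEV set and EPSS map would be loaded from the published feeds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve: str
    cvss: float  # base severity score reported by the scanner


def rank_findings(findings, kev_ids, epss_scores, epss_threshold=0.10):
    """Order findings: KEV-listed first, then likely-exploited, then the rest.

    kev_ids: set of CVE IDs with confirmed exploitation.
    epss_scores: dict mapping CVE ID -> exploitation probability (0..1).
    epss_threshold: hypothetical cut-off; tune it to your own risk appetite.
    """
    def sort_key(f):
        in_kev = f.cve in kev_ids
        epss = epss_scores.get(f.cve, 0.0)
        likely = epss >= epss_threshold
        # False sorts before True, so "not in_kev" puts KEV entries first.
        # Negated numbers make higher EPSS and CVSS values sort earlier.
        return (not in_kev, not likely, -epss, -f.cvss)

    return sorted(findings, key=sort_key)


# Made-up identifiers and scores, purely for illustration.
findings = [
    Finding("CVE-0000-0001", cvss=9.8),
    Finding("CVE-0000-0002", cvss=7.2),
    Finding("CVE-0000-0003", cvss=5.4),
]
kev_ids = {"CVE-0000-0002"}
epss_scores = {
    "CVE-0000-0001": 0.02,
    "CVE-0000-0002": 0.91,
    "CVE-0000-0003": 0.35,
}

ranked = [f.cve for f in rank_findings(findings, kev_ids, epss_scores)]
print(ranked)
```

Note that the 9.8 finding ends up last here: it has neither confirmed exploitation nor a meaningful exploitation probability, so CVSS only acts as a tie-breaker.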

What Holds Teams Back?

Despite their value, KEV and EPSS are not widely adopted. Many organizations still rely on default settings in their tools, which are often tied to CVSS thresholds. Prioritization logic is rarely customized, and security teams may not have the capacity or confidence to adjust scoring models.

But without change, the result is the same

- Thousands of open vulnerabilities

- Endless cycles of reactive patching

- Little impact on actual attack surface

Modern vulnerability prioritization is not about patching faster. It is about patching smarter. And that begins with understanding that not all vulnerabilities deserve the same attention.

Exploit Intelligence and Threat Contextualization

No matter how advanced your vulnerability detection systems are, their value is limited without understanding whether the issues they report are being actively targeted. Exploit intelligence brings real-world awareness into vulnerability prioritization. It helps security teams stop asking only “What is the severity of this vulnerability?” and start asking “Is someone actually trying to use this against us?”

Prioritizing based on severity scores or static presence alone results in unnecessary effort and overlooked threats. Threat contextualization ensures that vulnerability management is aligned with the actual tactics and behaviors of attackers.

The Gap Between Theoretical and Active Threats

Many vulnerabilities are technically severe but remain unexploited. Others may have lower severity scores yet become preferred entry points in widespread campaigns. This is the critical gap exploit intelligence helps bridge.

Consider these examples

- A CVE flagged with a 9.8 severity but no known exploit in the wild

- A CVE with a 7.2 score that is part of an active phishing-to-ransomware chain used by multiple threat groups

Without threat context, both vulnerabilities may be treated as equal or prioritized incorrectly. That misalignment leaves organizations vulnerable to real attacks while wasting time on hypotheticals.

What Exploit Intelligence Includes

Effective exploit intelligence consists of curated and validated data that tracks how vulnerabilities are being weaponized. Key elements include

- Active exploitations: Verified reports of CVEs being used in malware campaigns, ransomware drops, or remote access tools

- Threat actor behavior: Intelligence on which attacker groups are leveraging specific vulnerabilities, including their targets and objectives

- Exploit availability: Whether working exploit code exists publicly or in underground communities, increasing the risk of widespread abuse

- Tactical alignment: How a vulnerability fits into known tactics, techniques, and procedures used by adversaries

Exploit intelligence does not just inform prioritization. It can also shape defensive strategies, patch urgency, and detection engineering.

The Role of Known Exploited Vulnerabilities

The Known Exploited Vulnerabilities list is maintained by CISA to highlight CVEs that are confirmed to be actively exploited. This list is not theoretical or predictive. It is a record of exploitation that has actually been observed.

By focusing on these vulnerabilities first, teams can significantly reduce risk using a relatively small amount of effort. In many environments, less than one percent of total vulnerabilities account for the majority of breach activity. These are the vulnerabilities threat actors are using. They deserve attention regardless of their CVSS score.

This targeted approach results in

- Higher patching efficiency

- Reduced time spent on non-actionable CVEs

- Better alignment with threat reality

Adding Predictive Power With EPSS

While exploit intelligence provides confirmed insights, predictive scoring offers a forecast of future risk. The Exploit Prediction Scoring System uses real-world indicators such as exploit code availability, CVE metadata, and publication patterns to estimate the likelihood of exploitation in the near future.

Unlike static scores that remain unchanged, EPSS scores are updated regularly. This gives teams a way to anticipate shifts in attacker behavior and prioritize vulnerabilities before they become high-profile targets.

By layering EPSS with KEV and contextual asset data, organizations can

- Catch emerging threats before they escalate

- Reduce patching volume while improving coverage

- Adapt more quickly to new vulnerabilities with high exploitation potential
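Because EPSS scores change over time, one simple way to spot emerging threats is to compare two snapshots and flag the CVEs whose probability has jumped. The sketch below assumes each snapshot is a plain dict of CVE ID to probability; the `rising_epss` helper, the 0.05 minimum increase, and the sample values are hypothetical.

```python
def rising_epss(previous, current, min_increase=0.05):
    """Return (cve, increase) pairs whose EPSS rose by at least min_increase.

    previous, current: dicts mapping CVE ID -> exploitation probability (0..1).
    CVEs missing from the previous snapshot are treated as starting at 0.0.
    """
    risers = []
    for cve, score in current.items():
        delta = score - previous.get(cve, 0.0)
        if delta >= min_increase:
            risers.append((cve, round(delta, 4)))
    # Largest increase first, so the sharpest movers are reviewed first.
    return sorted(risers, key=lambda item: item[1], reverse=True)


# Made-up snapshots from two consecutive days.
yesterday = {"CVE-0000-0001": 0.02, "CVE-0000-0002": 0.40, "CVE-0000-0003": 0.10}
today = {
    "CVE-0000-0001": 0.30,
    "CVE-0000-0002": 0.41,
    "CVE-0000-0003": 0.09,
    "CVE-0000-0004": 0.08,
}

movers = rising_epss(yesterday, today)
print([cve for cve, _ in movers])
```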

Threat Context Makes Prioritization Dynamic

Without threat intelligence, prioritization is reactive and outdated. By the time a vulnerability becomes a headline, exploitation is already underway. With context-driven models, teams can transition to a more proactive posture.

That shift requires

- Integration between vulnerability scanners and threat intelligence feeds

- Internal threat modeling that reflects business-specific exposure

- Continuous updates to prioritization logic based on external attack signals

For example

A vulnerability affecting a common framework used in your customer-facing application is now circulating in underground exploit kits. Even if your scanner shows a moderate score, that signal should elevate the issue in your prioritization model. This is how vulnerability prioritization transforms from a checkbox activity into a live threat mitigation function.

Avoiding the Pitfalls of Overreliance

It is important to recognize that not all threat intelligence is equal. Challenges include

- Incomplete or outdated feeds

- Misinterpreted indicators leading to false urgency

- Lack of business relevance in some high-profile exploits

Contextualization is not about acting on every alert. It is about aligning action with the likelihood and consequence of exploitation in your specific environment.

The key is balance. Use exploit intelligence to validate threats, EPSS to predict movement, and business context to map everything to your operational reality.

This multi-layered approach gives security teams the clarity they need to act with precision, urgency, and confidence.

Four Generations of Vulnerability Prioritization

The way security teams prioritize vulnerabilities has gone through a dramatic evolution over the past two decades. Each generation of vulnerability prioritization reflects a response to changing threat environments, tool capabilities, and organizational needs. Understanding this evolution is essential to knowing where your current strategy stands, and where it must go next.

Many organizations today still operate using outdated first or second-generation methods, unaware that more effective models exist. This section walks through the four generations of vulnerability prioritization and what defines each stage.

Severity-Only Prioritization

The first generation was built around a simple premise: the higher the severity, the higher the risk.

This model depends heavily on CVSS scores. A vulnerability with a score of 9.0 or higher is considered critical and should be patched immediately. Anything below 4.0 is generally treated as a low priority.

In the early days of vulnerability management this was sufficient. Security teams were smaller, infrastructures were simpler, and there were fewer known vulnerabilities. But as enterprise environments became more complex and interconnected, the limitations of this model became clear.

What it missed

- No awareness of whether a vulnerability is being exploited

- No understanding of where the vulnerability exists in the environment

- No differentiation between high-severity but low-impact issues and vice versa

This created inefficiencies and left organizations exposed to real threats that did not rank high on severity scales.

Risk-Based with Asset Context

The second generation introduced a major improvement: factoring in the value and exposure of affected assets.

In this model, teams started asking questions like

- Is this vulnerability on a public-facing server

- Does it affect a critical business application

- What is the operational impact if it is exploited

Tools and workflows began integrating asset data such as system classification, data sensitivity, and business function. This allowed security teams to refine prioritization beyond pure CVSS.

For example, a 7.5 CVSS vulnerability on a mission-critical production server might be patched before a 9.8 CVSS issue on a test system behind multiple layers of protection.
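To make that comparison concrete, here is a minimal worked sketch that scales the CVSS score by asset criticality and exposure. The multipliers (1.0 for a mission-critical, directly reachable server; 0.3 and 0.5 for a test system behind layered protection) are invented for illustration and are not part of CVSS or any standard.

```python
def contextual_score(cvss, criticality, exposure):
    """Scale a CVSS score by asset context.

    criticality and exposure are hypothetical multipliers between 0 and 1
    that a team would define in its own asset inventory.
    """
    return cvss * criticality * exposure


# 7.5 CVSS on a mission-critical production server (assumed fully exposed).
production = contextual_score(7.5, criticality=1.0, exposure=1.0)

# 9.8 CVSS on a test system behind multiple layers of protection.
test_system = contextual_score(9.8, criticality=0.3, exposure=0.5)

print(round(production, 2), round(test_system, 2))
```

With these assumed weights the production issue scores well above the test-system issue, which matches the patch order described above. Different weights would give different gaps, so the multipliers themselves deserve review.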

Second-generation models significantly improved prioritization outcomes but still had blind spots, particularly around exploitability and threat intelligence.

Threat-Informed Prioritization

The third generation came with the realization that a vulnerability’s importance is tightly linked to whether it is being used by real attackers.

This stage introduced two core elements into prioritization

- Exploit intelligence: Integrating data from external feeds to see which vulnerabilities are actively being exploited

- Known Exploited Vulnerabilities (KEV): Leveraging government or industry-curated lists like CISA’s KEV catalog to identify CVEs that have proven weaponization

This model brought a more threat-informed approach to vulnerability management. It enabled teams to respond to live attacker behaviors rather than theoretical risks.

Tools in this generation started connecting to threat intelligence platforms, aggregating indicators of compromise, and tagging vulnerabilities based on active campaigns.

However, challenges remained

- Intelligence quality varied across sources

- Integration was often manual and inconsistent

- Prioritization still lacked predictive capability

That limitation set the stage for the next evolution.

Predictive and Automated Models

The fourth and most advanced generation represents a shift from static and reactive models to dynamic and predictive ones.

Here, prioritization is powered by real-time data and automation. The most prominent development in this generation is the Exploit Prediction Scoring System (EPSS), which forecasts the likelihood of exploitation within the next 30 days using statistical and machine learning models.

Fourth-generation prioritization includes

- Integration of CVSS, KEV, and EPSS into a unified decision model

- Scoring models that update daily based on changes in threat behavior

- Automation of remediation workflows based on exploit probability

- Use of attack path modeling and runtime insights from tools like Sysdig or Wiz

Organizations adopting this approach are seeing measurable results

- Reduced patch volume by over 80 percent

- Increased remediation accuracy by targeting truly dangerous vulnerabilities

- Better alignment between vulnerability management and business risk

This generation marks the transition from security operations as alert processors to security as risk-informed decision engines.

What This Means for Your Strategy

Knowing which generation your current process aligns with can help you identify the next leap forward.

- If your teams are still using severity alone, you are in generation one

- If asset context is included but not threat data, you are in generation two

- If you are using threat intelligence but no prediction modeling, you are in generation three

- If your prioritization is informed by exploit probability, automation, and runtime data, you are in generation four
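The same self-assessment can be written as a small function. This is only a sketch: the parameter names are made up, and it assumes the capabilities are cumulative, so it reports the highest generation whose defining capability is present.

```python
def prioritization_generation(
    uses_asset_context=False,
    uses_threat_intel=False,
    uses_prediction_and_automation=False,
):
    """Map the capabilities a team has in place to a generation number (1-4)."""
    if uses_prediction_and_automation:
        return 4  # exploit probability, automation, and runtime data
    if uses_threat_intel:
        return 3  # threat intelligence, but no prediction modeling
    if uses_asset_context:
        return 2  # asset context, but no threat data
    return 1      # severity alone


print(prioritization_generation(uses_asset_context=True))
```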

The future of vulnerability prioritization belongs to those who can reduce noise with precision, predict where real danger lies, and act before attackers do.

Cloud Native and Runtime Based Prioritization

As organizations migrate to the cloud and embrace containerization, the nature of risk and how we prioritize it changes. Traditional vulnerability management tools were designed for static infrastructure and predictable environments. But cloud-native systems are dynamic, ephemeral, and deeply interconnected. That complexity demands a new layer of prioritization thinking.

Vulnerability prioritization in the cloud is not just about what exists in your environment. It is about what is actually in use, what is exposed in real time, and what can be exploited based on active runtime conditions.

The Shift From Static to Runtime Visibility

In traditional infrastructure, a server might run the same applications for years. Once a vulnerability is detected, it can be analyzed and patched in a relatively stable environment. In cloud-native architecture, workloads come and go. Containers spin up and shut down automatically. Microservices are deployed in seconds and updated continuously through CI/CD pipelines.

This makes static scanning far less useful. A container image might contain a vulnerable package but if that package is never executed at runtime, does it actually pose a risk? Likewise, a vulnerability on a container that existed for three minutes in a staging environment may be flagged but never exploitable.

Runtime-based prioritization addresses these nuances by focusing on

- Which components are actually active

- Whether the vulnerable code is being executed

- If the system is reachable from external networks

In Use Is the New Critical

The concept of in-use vulnerability detection focuses on scanning not just the image or artifact but the actual runtime behavior of containers or cloud workloads.

For example

- A vulnerability in a library that is installed but never called is deprioritized

- A vulnerability in a package loaded in memory during execution is prioritized

- A vulnerability in a service exposed to the internet receives higher urgency

This approach narrows the remediation scope and focuses engineering effort where it counts. Rather than generating alerts for every theoretical flaw in every container layer, runtime analysis filters the noise and highlights real exposure.
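A minimal sketch of that filtering step follows. It assumes a runtime sensor can supply the set of packages actually loaded by a workload; the `ImageFinding` class, the package names, and the bucket labels are hypothetical. It also makes one simplifying assumption: a package that is never loaded is deprioritized even when the service itself is internet-facing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageFinding:
    cve: str
    package: str
    internet_exposed: bool = False


def classify_by_runtime(findings, loaded_packages):
    """Bucket image findings by whether the vulnerable package is in use."""
    buckets = {"urgent": [], "prioritized": [], "deprioritized": []}
    for f in findings:
        if f.package not in loaded_packages:
            # Installed in the image but never loaded at runtime.
            buckets["deprioritized"].append(f.cve)
        elif f.internet_exposed:
            # Loaded and running in a service reachable from the internet.
            buckets["urgent"].append(f.cve)
        else:
            # Loaded in memory, but not externally exposed.
            buckets["prioritized"].append(f.cve)
    return buckets


# Illustrative data only.
findings = [
    ImageFinding("CVE-0000-0001", "libunused"),
    ImageFinding("CVE-0000-0002", "libparser"),
    ImageFinding("CVE-0000-0003", "libhttp", internet_exposed=True),
]
loaded = {"libparser", "libhttp"}

result = classify_by_runtime(findings, loaded)
print(result)
```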

Exposure Context in Cloud Environments

Cloud services operate with shared responsibility and layered architecture. This creates more moving parts that affect exposure. Prioritization in this landscape must consider

- Network accessibility: Is the vulnerable service exposed via public endpoints like APIs or load balancers?

- Identity and permissions: Does the affected service have access to sensitive data or production systems through IAM roles?

- Inter-service communication: Can a vulnerability in one container be used to pivot laterally to another, more critical container?

- Ephemeral lifecycle: How long does the affected workload exist? Is it part of a CI/CD workflow that will redeploy vulnerable images repeatedly?

All of these factors add critical context. A CVE in a non-public internal service that is up for ten seconds and never receives external traffic is not the same as one affecting a production API gateway.
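One way to capture these factors is a simple additive exposure score. The sketch below is illustrative only: the `WorkloadContext` fields, the point values, and the 300-second "short-lived" cut-off are assumptions a team would replace with its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadContext:
    public_endpoint: bool              # reachable via public API or load balancer
    sensitive_iam_access: bool         # IAM role grants access to sensitive data
    can_reach_critical_services: bool  # possible lateral movement target
    lifetime_seconds: float            # how long the workload typically exists
    redeployed_by_pipeline: bool       # same vulnerable image keeps coming back


def exposure_points(ctx, short_lived_seconds=300):
    """Add up hypothetical exposure points for a workload (0 to 8)."""
    points = 0
    if ctx.public_endpoint:
        points += 3
    if ctx.sensitive_iam_access:
        points += 2
    if ctx.can_reach_critical_services:
        points += 2
    # A short-lived workload matters less, unless the pipeline keeps
    # redeploying the same vulnerable image.
    if ctx.lifetime_seconds >= short_lived_seconds or ctx.redeployed_by_pipeline:
        points += 1
    return points


internal_job = WorkloadContext(False, False, False, 10, False)
api_gateway = WorkloadContext(True, True, True, 86_400, True)

print(exposure_points(internal_job), exposure_points(api_gateway))
```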

Prioritization Must Follow the DevOps Rhythm

Cloud-native organizations often use DevOps or DevSecOps practices. Code is deployed frequently and security controls must operate within that fast-paced cycle. This has two implications for vulnerability prioritization

- Shifting left is not enough: Catching vulnerabilities during development is useful but insufficient. What matters is whether those vulnerabilities make it into production and are reachable. Runtime data bridges that gap.

- Automation must be intelligent: Auto-creating tickets for every vulnerability in every build overwhelms development teams. Intelligent automation filters issues based on actual risk. It prevents alert fatigue and builds trust with engineering.

Prioritization models need to plug into the same tools that developers use, such as GitHub, GitLab, Jenkins, or Argo CD. The decisions made by security must be fast, actionable, and rooted in the reality of how systems behave in production.
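As an example of what filtered automation might look like inside a pipeline step, the sketch below opens tickets only for findings that are KEV-listed, or that are deployed, reachable, and likely to be exploited. The `BuildFinding` fields, the 0.10 threshold, and the function names are hypothetical, and the actual ticket creation call is left out because it depends on the tracker in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildFinding:
    cve: str
    deployed_to_production: bool
    reachable: bool
    kev_listed: bool
    epss: float  # exploitation probability, 0..1


def needs_ticket(f, epss_threshold=0.10):
    """Return True only for findings that justify interrupting a developer."""
    if f.kev_listed:
        return True  # confirmed exploitation always gets a ticket
    return f.deployed_to_production and f.reachable and f.epss >= epss_threshold


def gate_build(findings):
    """Split one build's findings into tickets to open and items to only log."""
    tickets = [f.cve for f in findings if needs_ticket(f)]
    logged = [f.cve for f in findings if not needs_ticket(f)]
    return tickets, logged


# Illustrative findings from a single build.
build = [
    BuildFinding("CVE-0000-0001", True, True, False, 0.42),
    BuildFinding("CVE-0000-0002", False, False, False, 0.60),
    BuildFinding("CVE-0000-0003", True, False, True, 0.01),
]

tickets, logged = gate_build(build)
print(tickets, logged)
```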

The Role of Cloud Native Security Platforms

Modern prioritization in cloud environments often leverages two categories of tools

- Cloud Security Posture Management (CSPM): Provides visibility into misconfigurations and policy violations in cloud setups. Useful for identifying issues like open storage buckets or overly permissive IAM roles.

- Cloud Workload Protection Platforms (CWPP): Offer deep insights into workload behavior including runtime analysis, vulnerability management, and compliance. These platforms help consolidate runtime insights with risk signals.

When these tools integrate with vulnerability data and exploit intelligence, they create a more mature prioritization model. Not only do they help detect vulnerabilities, they also allow teams to understand which ones are actionable and urgent based on live system behavior.

From Detection to Real Time Risk Reduction

In the cloud, the old approach of scan, patch, repeat no longer works. Vulnerabilities need to be prioritized not just by static presence but by runtime relevance and real-time exposure. That means

- Identifying whether vulnerable code is loaded and running

- Understanding how and where the affected service is reachable

- Knowing whether the vulnerability enables an attacker to move laterally or escalate privileges

- Automating decisions that align with system behavior rather than tool defaults

The goal is no longer just visibility. It is real time risk reduction. And that is only possible when vulnerability prioritization evolves to meet the pace and complexity of modern cloud-native systems.

Regulatory and Compliance Drivers

Modern vulnerability management is no longer just about defending against threats. It is also about meeting the growing expectations of regulators, auditors, and legal frameworks. As cybersecurity incidents continue to make headlines, regulatory bodies are increasing pressure on organizations to show not only that they identify vulnerabilities, but also that they prioritize and remediate them in a structured and timely manner.

Vulnerability prioritization plays a critical role in achieving compliance. It connects the technical realities of patch management with the accountability demands of legal, financial, and governance frameworks.

Compliance Is Shifting From Reactive to Proactive

In the past, many compliance programs simply required that vulnerabilities be identified and patched within defined time windows based on severity. Today, those models are no longer sufficient.

Regulators now expect organizations to

- Identify which vulnerabilities truly impact critical systems

- Demonstrate the reasoning behind patching priorities

- Show that exploitability and risk context are part of decision-making

- Maintain audit trails of triage and remediation activity

This evolution reflects the broader move toward risk-based compliance, where actions must be justifiable based on real-world threat scenarios rather than just technical severity.

CISA and Mandatory Remediation of Known Exploits

One of the most significant shifts in regulatory enforcement comes from mandates like those issued by the Cybersecurity and Infrastructure Security Agency.

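Under CISA’s Binding Operational Directive 22-01, US federal civilian executive branch agencies are required to remediate vulnerabilities from the Known Exploited Vulnerabilities list within the due dates the catalog assigns. CISA strongly recommends that all other organizations, including those in critical infrastructure sectors, prioritize KEV remediation as well.

For the agencies it covers, this list is not optional guidance. It is a compliance obligation. For other organizations, depending on the regulations and contracts that apply, missing a KEV deadline can result in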

- Formal regulatory penalties

- Increased liability in the event of a breach

- Negative audit outcomes and reputational damage

This mandate reinforces the idea that not all vulnerabilities are equal and that prioritization must reflect threat reality.

International Frameworks Emphasize Risk Awareness

Beyond local mandates, international security frameworks are also adopting more nuanced expectations around vulnerability management.

For example

- ISO 27001 requires organizations to assess and treat information security risks methodically, including timely identification and resolution of vulnerabilities

- PCI DSS includes requirements for patching and vulnerability handling, especially in systems that store or process payment information

- HIPAA expects covered entities to identify technical safeguards and address system flaws that could lead to exposure of patient data

- GDPR implies a duty of care in protecting personal data, including ensuring systems are hardened against known threats

Each of these standards does not just ask if you patched a vulnerability. They ask whether your organization can demonstrate that it is prioritizing based on risk, business impact, and exploitation likelihood.

Vulnerability Prioritization Helps Satisfy Control Objectives

When audit season arrives, being able to show structured, context-driven vulnerability prioritization helps satisfy multiple control objectives, including

- Risk identification and treatment

- Response and remediation timelines

- Evidence of continuous monitoring

- Justification of exceptions or delayed patches

- Integration with incident response planning

It also provides cover for when decisions are challenged. If a patch is delayed, but documented evidence shows the vulnerability was not actively exploited, not exposed, and protected by compensating controls, that decision becomes easier to defend.

The Cost of Non-Compliance

Failing to prioritize vulnerabilities properly can lead to much more than a security incident. The business impact includes

- Regulatory fines for breach of compliance requirements

- Lawsuits or civil liability from affected users or customers

- Insurance complications where policy coverage depends on due diligence

- Brand damage from public disclosure of avoidable incidents

These risks highlight why vulnerability management must go beyond ticket resolution. It must tie into enterprise risk management and compliance oversight at every level.

Building Compliance into Prioritization Workflows

Rather than treating compliance as a parallel effort, security teams can embed it into their existing workflows by

- Mapping regulatory controls to vulnerability types and asset classes

- Including KEV tracking as a mandatory input into triage decisions

- Automating reporting of remediation timelines and justification notes

- Coordinating with legal and compliance teams during risk acceptance reviews

This integrated approach reduces friction across departments and positions security as a partner in organizational governance.
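As one small example of KEV tracking as a triage input, the sketch below checks open findings against KEV due dates and lists the ones that are overdue. The `cveID` and `dueDate` keys follow the field names used in CISA's KEV JSON feed as I understand it; verify them against the current schema before relying on this. The entries and dates are made up.

```python
from datetime import date


def overdue_kev(open_cves, kev_entries, today):
    """Return open CVEs that are KEV-listed and past their due date, sorted."""
    due_by_cve = {
        entry["cveID"]: date.fromisoformat(entry["dueDate"])
        for entry in kev_entries
    }
    return sorted(
        cve for cve in open_cves
        if cve in due_by_cve and due_by_cve[cve] < today
    )


# Illustrative catalog excerpt and open findings.
kev_entries = [
    {"cveID": "CVE-0000-0001", "dueDate": "2024-01-15"},
    {"cveID": "CVE-0000-0002", "dueDate": "2024-06-01"},
]
open_cves = {"CVE-0000-0001", "CVE-0000-0002", "CVE-0000-0009"}

late = overdue_kev(open_cves, kev_entries, today=date(2024, 3, 1))
print(late)
```

The output of a check like this can feed both the remediation queue and the audit trail, since it records which deadlines were missed and when.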

From Security Responsibility to Regulatory Accountability

In the eyes of regulators, security decisions are no longer just technical judgments. They are business decisions with regulatory consequences. Vulnerability prioritization becomes a mechanism not only for defense but also for demonstrating accountability.

Organizations that prioritize with context, document their decisions, and align remediation with known risk indicators are in a stronger position to meet compliance standards and defend those choices if questioned.

This is how prioritization protects not just infrastructure but also reputational and legal standing.

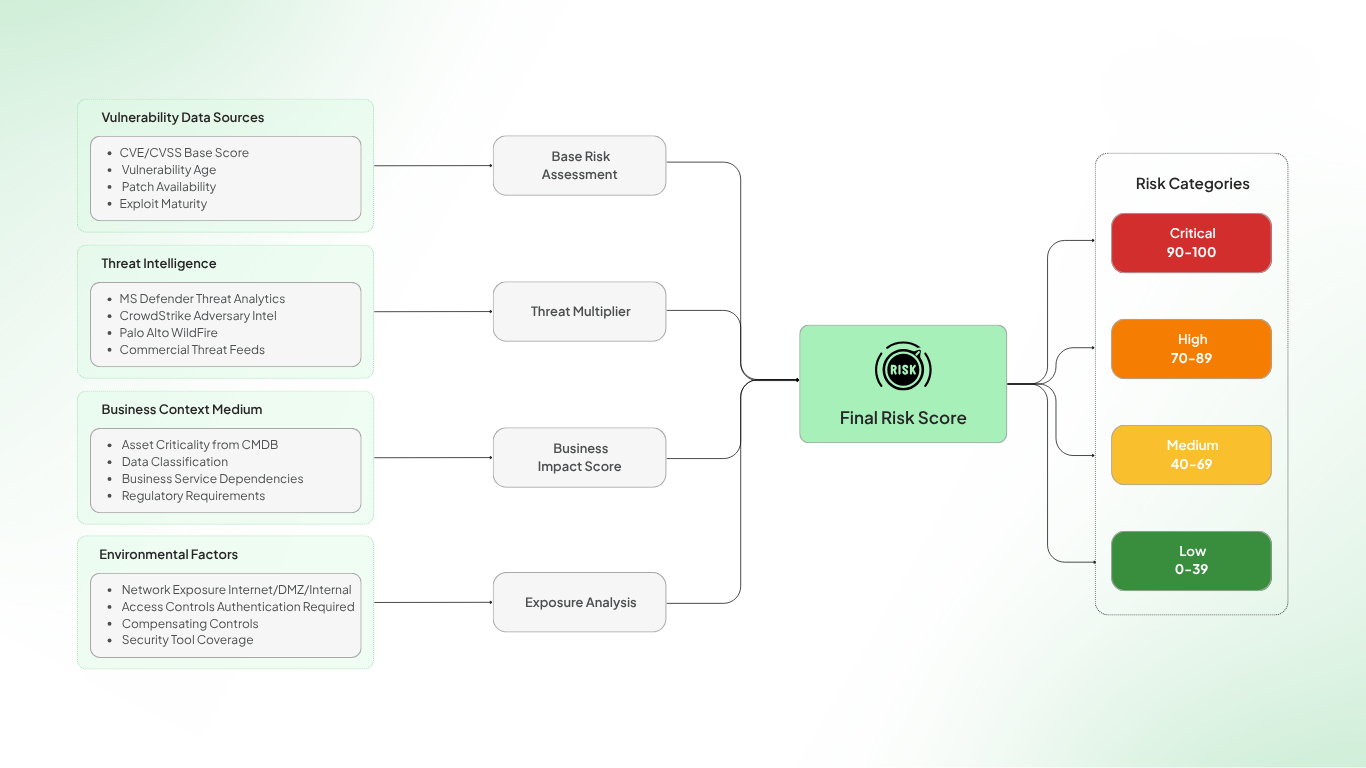

The Tools and Templates Behind Real-World Prioritization

Vulnerability prioritization is not just a strategy. It is a system. That system becomes more effective when supported by structured frameworks, visual tools, and repeatable logic. These elements help teams move from subjective decision-making to consistent and scalable risk reduction.

Whether you are working within a small security team or managing a multi-region enterprise, having the right models makes prioritization faster, clearer, and easier to justify.

The Role of Frameworks in Decision-Making

A prioritization framework provides structure to an otherwise chaotic process. Instead of evaluating vulnerabilities on a case-by-case basis with shifting criteria, frameworks allow teams to apply consistent standards across systems and timeframes.

A solid framework answers questions such as

- What factors influence urgency

- How are vulnerabilities scored relative to one another

- When should a patch be deployed and when is mitigation enough

These frameworks typically draw from multiple sources of data including

- Technical severity scores

- Exploit intelligence feeds

- Asset classification

- Business impact modeling

- Compliance relevance

By combining these inputs, organizations create a more comprehensive view of risk and allocate attention accordingly.

Designing a Risk-Based Prioritization Matrix

One of the most widely used tools in vulnerability management is the risk matrix. This visual model maps different variables against each other to determine priority levels.

A basic matrix might have

- One axis for likelihood of exploitation, drawn from KEV, EPSS, and threat intelligence

- One axis for impact, including asset criticality, data sensitivity, and operational role

Here is a simple example of what this could look like

| Likelihood of Exploitation | Low Impact | Medium Impact | High Impact |
| --- | --- | --- | --- |
| Low | Ignore | Monitor | Review |
| Medium | Monitor | Review | Prioritize |
| High | Review | Prioritize | Immediate Fix |

This matrix helps standardize triage decisions across teams, providing clear guidance based on measurable factors rather than subjective instincts.
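The matrix translates directly into a lookup table, which is one way to keep triage tooling consistent with the documented policy. The sketch below encodes the cells exactly as shown above; the `triage_action` function name is an assumption.

```python
# Rows are likelihood of exploitation, columns are impact.
RISK_MATRIX = {
    "low":    {"low": "Ignore",  "medium": "Monitor",    "high": "Review"},
    "medium": {"low": "Monitor", "medium": "Review",     "high": "Prioritize"},
    "high":   {"low": "Review",  "medium": "Prioritize", "high": "Immediate Fix"},
}


def triage_action(likelihood, impact):
    """Look up the action for a likelihood and impact level (case-insensitive)."""
    return RISK_MATRIX[likelihood.lower()][impact.lower()]


print(triage_action("High", "High"))
print(triage_action("Low", "Medium"))
```

Keeping the matrix as data rather than nested conditionals means a policy change is a one-line edit that is easy to review.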

Triage Flowcharts for Fast Decision‑Making

In fast-paced environments, flowcharts offer a streamlined way to move from detection to decision. A well-designed flowchart can answer

- Is this vulnerability affecting production

- Is it externally reachable

- Is there a known exploit

- Does it impact regulated or sensitive data

Each yes or no directs the flow toward remediation, monitoring, or deferral.

Example flow

1. Is the vulnerability on a public-facing system

- Yes → Check for known exploit

- No → Check asset criticality

2. Is there an exploit available or in active use

- Yes → Immediate action

- No → Check runtime exposure

3. Is the code executed in production

- Yes → Schedule fix

- No → Defer with monitoring

Flowcharts like these reduce friction, improve response times, and remove ambiguity from triage.
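The example flow can be sketched as a single function. One caveat: the flow does not say what follows the asset-criticality check for non-public systems, so this sketch assumes that critical internal assets continue to the exploit check while non-critical ones skip to the runtime check. That branch, the function name, and the parameter names are assumptions.

```python
def triage(public_facing, exploit_known, executed_in_production,
           asset_critical=False):
    """Walk the three-step example flow and return a decision string."""
    # Step 1: public-facing systems go to the exploit check.
    # Assumption: critical internal assets are treated the same way.
    check_exploit = public_facing or asset_critical

    # Step 2: a known or active exploit means immediate action.
    if check_exploit and exploit_known:
        return "Immediate action"

    # Step 3: otherwise decide on runtime exposure.
    if executed_in_production:
        return "Schedule fix"
    return "Defer with monitoring"


print(triage(public_facing=True, exploit_known=True, executed_in_production=True))
print(triage(public_facing=True, exploit_known=False, executed_in_production=False))
```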

Customizable Templates for Teams

Standard templates allow organizations to scale their prioritization logic across teams and environments. Templates might include

- Risk scoring sheets that assign numeric weights to different variables

- Prioritization heatmaps for various business units

- SLA tables for remediation deadlines based on priority tiers

- Justification logs for delayed patches or accepted risks

These templates become especially useful for

- Reporting to auditors

- Coordinating with IT operations

- Aligning with cross-functional stakeholders in compliance or legal

When combined with tools and automation, templates can drive ticket creation, task assignment, and KPI dashboards tracking prioritization effectiveness.

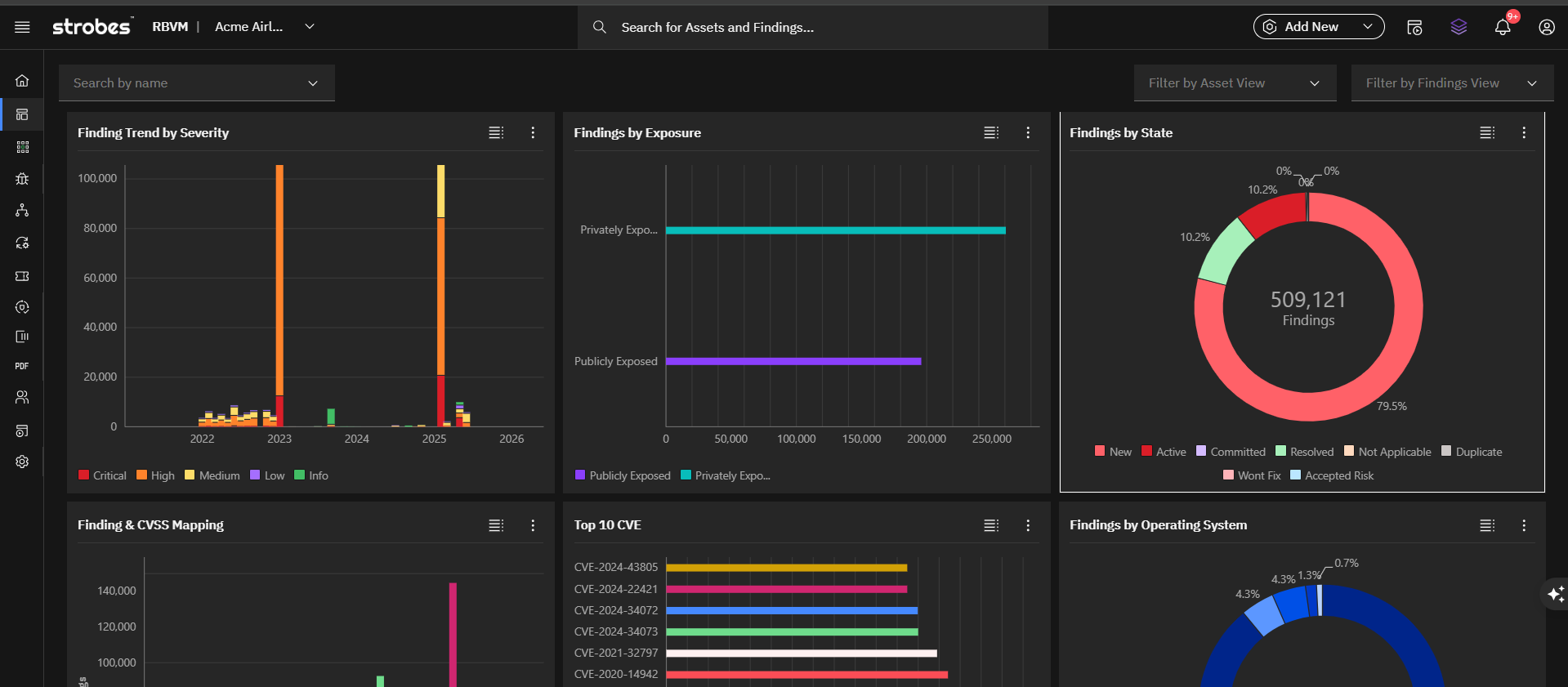

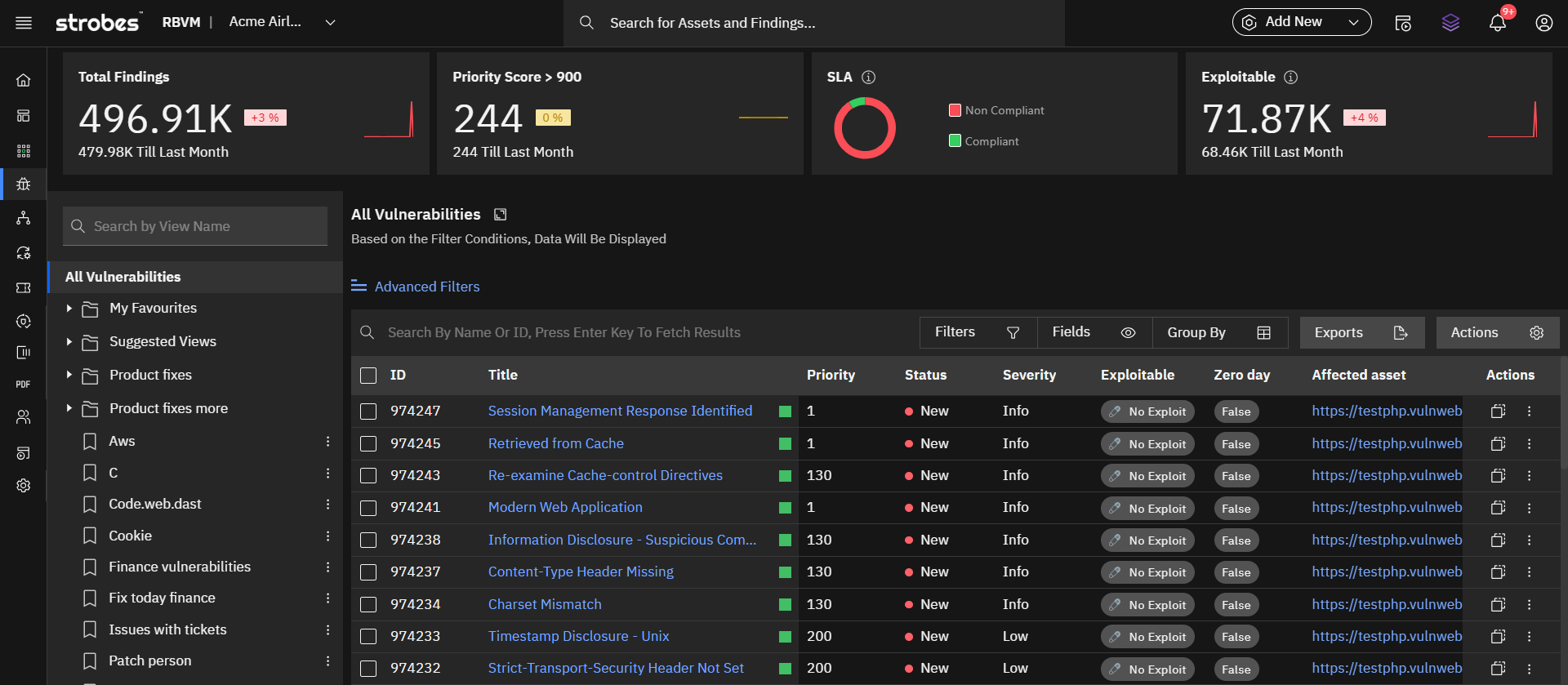

For instance, some platforms now offer ready-made dashboards and prioritization scores that surface risk-ranked vulnerabilities alongside business-oriented metrics.

Visual Tools That Communicate Clearly

Visuals help turn complex data into actionable decisions. Security teams often deal with large datasets and fast-changing conditions. Clear visuals bring order and help explain prioritization logic to both technical and non-technical audiences.

Useful visualizations include

- Vulnerability maps showing clusters of unpatched risks across environments

- Exposure trees that trace how an exploited vulnerability affects key assets

- Remediation timelines that show trends in time to fix

- Heatmaps comparing vulnerability count and exploitability over time

These visuals not only support internal decision-making but also improve transparency across departments and leadership.

Bringing It All Together

A good vulnerability prioritization system is not a rigid checklist. It is a living infrastructure that integrates models, people, workflows, and data. The goal is to make prioritization

- Repeatable across environments

- Transparent to stakeholders

- Adaptable as threats evolve

- Accountable through documentation and audit trails

The combination of matrices, flowcharts, templates, and visuals helps operationalize vulnerability prioritization in a way that is scalable and defensible. It transforms abstract security principles into concrete, actionable practice.

By building these tools into your workflow, you are not just improving response times, you are institutionalizing better decisions and building resilience into your security program.

Tool Ecosystem Comparison and Practical Approaches

Every organization managing vulnerabilities at scale eventually turns to tools for help. But not all tools are built with prioritization in mind. Many simply catalog issues based on severity and volume, lacking the intelligence or flexibility needed to separate noise from actual risk.

Effective vulnerability prioritization requires tools that do more than scan. They must support decision-making, integrate with existing workflows, and reflect the dynamic nature of threats. Choosing the right tool, or combination of tools, is less about brand and more about functionality.

What the Right Tools Should Offer

Before evaluating products, it helps to define what effective vulnerability prioritization tools should do. At a minimum, they should support

- Exploit intelligence integration: Tools must include or connect to threat feeds and exploit prediction systems such as EPSS or curated exploit databases.

- Asset and business context: They should allow mapping vulnerabilities to the criticality and sensitivity of affected systems.

- Custom prioritization logic: Teams should be able to adjust scoring rules and decision flows based on their environment.

- Automation capabilities: This includes auto-triage of alerts, ticket creation, remediation suggestions, and workflow orchestration.

- Real-time data correlation: Tools must track live exposure, not just static vulnerability presence. This is especially important in cloud and containerized environments.

- Support for exception handling: Ability to document and manage deferred fixes, compensating controls, and risk acceptance decisions.

Without these features, tools simply surface problems. They do not help teams act on what matters most.

Categories of Tools That Support Prioritization

Instead of listing vendors, it is more useful to look at categories and their respective strengths when applied to prioritization.

- Vulnerability management platforms: These focus on discovery and reporting. The most advanced versions now incorporate exploit intelligence, asset context, and dynamic scoring.

- Threat intelligence aggregators: These tools gather real-time attack data and match it against your environment. When combined with scanner output, they improve prioritization accuracy.

- Cloud-native security platforms: Offer runtime insights, CI/CD integration, and container-level risk analysis. Particularly useful for in-use prioritization and modern infrastructure.

- Risk quantification and exposure analysis tools: Help translate vulnerabilities into business metrics such as financial risk, exposure duration, and breach impact probability.

- Security orchestration and automation platforms: These streamline workflows by triggering automated responses to high-risk vulnerabilities. Also useful for integrating prioritization decisions into ticketing systems and change management.

Each of these tool types plays a different role in a complete prioritization strategy. The key is integration, not isolation. The more connected your data and processes are, the more accurate and efficient your prioritization becomes.

Avoid Static Dashboards That Rely Solely on CVSS

Not all platforms are built with effective prioritization in mind. Many still rely on static dashboards that sort vulnerabilities only by CVSS score and offer limited flexibility. These approaches often overlook exploitability, asset importance, and operational context. As a result, they can lead to generic remediation efforts that do not reflect actual risk.

Modern solutions are designed to address this gap. Platforms that support continuous threat exposure management integrate threat intelligence, business context, and automation. They allow teams to create workflows based on real exposure and risk, not just technical severity. Strobes is an example of such a platform, enabling security teams to customize prioritization logic, automate triage, and align remediation with real-world threats and organizational priorities.

Building a Practical Prioritization Workflow

A strong prioritization workflow supported by the right tools might look like this

- Vulnerabilities are ingested from multiple scanners across infrastructure, containers, and applications

- Threat intelligence feeds flag known exploited vulnerabilities and assign EPSS likelihoods

- Asset data links each finding to system importance and business function

- A rules engine scores and categorizes each vulnerability using customized weights

- Automation routes tickets to the right teams with recommended timelines

- Dashboards track time to remediate, exposure reduction, and exceptions

- Regular review cycles update thresholds and logic based on new threat trends

This flow connects detection with action and replaces patch-everything models with intelligent triage based on real risk.
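The ingestion step at the top of this flow tends to produce duplicates, because several scanners can report the same CVE on the same asset. A minimal sketch of a merge pass is shown below. The scanner names, asset names, and placeholder CVE identifiers are invented for illustration, and real scanner output would need normalizing into this shape first.

```python
def merge_findings(scanner_outputs):
    """Merge findings from several scanners, keyed by (asset, CVE).

    scanner_outputs maps a scanner name to a list of dicts that each
    carry an "asset" and a "cve" field (an assumed, simplified schema).
    """
    merged = {}
    for scanner, findings in scanner_outputs.items():
        for finding in findings:
            key = (finding["asset"], finding["cve"])
            entry = merged.setdefault(
                key,
                {"asset": finding["asset"], "cve": finding["cve"], "sources": []},
            )
            # Record every scanner that reported this finding, once each.
            if scanner not in entry["sources"]:
                entry["sources"].append(scanner)
    return list(merged.values())


# Placeholder data: not real scanners or real CVE identifiers.
outputs = {
    "infra_scanner": [{"asset": "web-01", "cve": "CVE-0000-0001"}],
    "container_scanner": [
        {"asset": "web-01", "cve": "CVE-0000-0001"},
        {"asset": "api-01", "cve": "CVE-0000-0002"},
    ],
}
unique = merge_findings(outputs)
print(len(unique))  # 2
```

Keeping the list of reporting scanners on each merged record preserves an audit trail for later review.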

When Simplicity Is the Best Strategy

For smaller teams or organizations just starting to mature their vulnerability program, simplicity often outperforms complexity. A spreadsheet model using EPSS data, KEV tags, and business context can outperform an expensive tool that lacks strategic integration.

The key is not how advanced the tool is, but whether it helps you prioritize better. Prioritization is about clarity and control. Any tool that brings confusion, delays, or irrelevant alerts works against that mission.

Focus first on defining your prioritization logic. Then choose or customize tools that enable that logic to scale across your environment.
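As a rough illustration of the spreadsheet approach, the sketch below ranks rows from a CSV export using only the Python standard library. The column names, the ordering rule (KEV-listed rows first, then EPSS multiplied by an asset criticality of 1 to 3), and the placeholder CVE identifiers are all assumptions made for the example, not a recommended standard.

```python
import csv
import io

# Placeholder rows standing in for a spreadsheet export.
SAMPLE_SHEET = """cve,epss,kev,asset_criticality
CVE-0000-0001,0.02,no,3
CVE-0000-0002,0.91,no,2
CVE-0000-0003,0.10,yes,1
CVE-0000-0004,0.40,no,3
"""


def rank_rows(csv_text):
    """Sort rows: KEV-listed first, then by EPSS x criticality, descending."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))

    def sort_key(row):
        in_kev = row["kev"].strip().lower() == "yes"
        weighted = float(row["epss"]) * int(row["asset_criticality"])
        # False sorts before True, so "not in_kev" puts KEV rows on top.
        return (not in_kev, -weighted)

    return sorted(rows, key=sort_key)


ranked = rank_rows(SAMPLE_SHEET)
for row in ranked:
    print(row["cve"], row["kev"], row["epss"])
```

With the sample rows, the KEV-listed entry ranks first even though its EPSS value is low, which reflects the assumption that confirmed exploitation outranks predicted exploitation.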

Real World Case Studies and Data Driven Outcomes

The real test of any vulnerability prioritization strategy is not in theory but in practice. It is one thing to build a model and another to prove that it reduces risk, saves time, and aligns security with business outcomes. Case studies and measurable data provide the evidence that prioritization done right can deliver meaningful results.

In this section, we look at real scenarios and performance metrics that showcase the tangible impact of contextual, risk-based vulnerability prioritization.

The Strobes CTEM Platform Case Study

One notable example involves a mid-sized e-commerce company that implemented Strobes’ Continuous Threat Exposure Management platform. Prior to Strobes, the security team faced overwhelming scanner noise, manual triage, and inconsistent patching timelines.

After deploying Strobes, the organization achieved

- 82 percent reduction in false positives

- 67 percent faster remediation time

- 70 percent decrease in manual triage effort

These outcomes were made possible by Strobes’ ability to integrate threat intelligence, correlate business context, and automate prioritization workflows. The team was able to focus only on what mattered and measure improvements in both operational and strategic terms.

Industry Benchmark Data Validating Prioritization Impact

Independent research reinforces that most vulnerabilities do not warrant immediate remediation. According to recent studies

- Only 6 percent of all known CVEs are ever exploited in the wild

- About 8 percent of software weaknesses are actively exploited during any given 30-day period

- Organizations using models that combine CVSS, KEV, and EPSS have reported triage workflows that are 14 to 18 times more efficient and a 95 percent reduction in patching workload compared to CVSS-only approaches

These statistics show that prioritization is not just about reducing alerts. It is about concentrating effort where it has the most impact.

Reducing Patch Waste and Improving Remediation Accuracy

Security teams that adopt contextual triage models consistently report

- A reduction of patching activity by over 80 percent without increasing exposure

- Improved SLA compliance for high-risk vulnerabilities

- Better alignment with engineering workflows and reduced alert fatigue

This shift allows teams to move away from volume-based patching to outcome-driven remediation.

Business Risk Alignment and Executive Clarity

Organizations that map vulnerabilities to business units and asset criticality report additional gains

- Security and IT teams spend less time negotiating over patch urgency

- Business units gain clarity on how technical risk translates to financial and reputational exposure

- Executives receive clearer reporting on what is being done, why it matters, and how it reduces enterprise risk

These real-world outcomes demonstrate that vulnerability prioritization, when executed well, is not just a technical enhancement. It becomes a business advantage.

Step by Step Implementation Roadmap

Building an effective vulnerability prioritization program does not require a massive overhaul. What it needs is a structured rollout guided by clear logic, measurable goals, and scalable workflows. Whether you are starting from scratch or refining an existing process, this roadmap outlines how to implement prioritization that aligns with threat reality and organizational priorities.

Step 1 Define Objectives and Scope

Start by clarifying what you are trying to achieve. Objectives may include

- Reducing time to remediate high-risk vulnerabilities

- Improving alignment between security and operations

- Meeting compliance mandates around known exploited vulnerabilities

- Reducing manual triage and alert fatigue

Determine where you will apply the prioritization process

- Will it be across all assets or start with cloud workloads?

- Are you including containers, web applications, and third-party libraries?

- Which business units or environments are in scope?

Clarity here will focus your design decisions in the following steps.

Step 2 Map Your Assets and Business Context

Prioritization only works when it is grounded in asset context. You need to know what you are protecting and why it matters.

Actions include

- Create or enrich an asset inventory that includes classification, ownership, and exposure levels

- Identify critical applications, revenue-linked systems, and sensitive data repositories

- Tag assets based on environment type, such as production, development, internal, or external

This step ensures that your prioritization logic accounts for real business impact.
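One way to picture the inventory from this step is a small record per asset. The sketch below is only an illustration. The field names, the classification labels, and the `is_high_value` rule are assumptions, and a real inventory would more likely live in a CMDB or asset management tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    owner: str           # team accountable for remediation
    classification: str  # e.g. "revenue-linked", "sensitive-data", "general"
    environment: str     # "production" or "development"
    exposure: str        # "external" or "internal"


# ASSUMPTION: these two classifications mark business-critical systems.
HIGH_VALUE_CLASSES = {"revenue-linked", "sensitive-data"}


def is_high_value(asset: Asset) -> bool:
    """Flag production assets that carry revenue or sensitive data."""
    return (
        asset.environment == "production"
        and asset.classification in HIGH_VALUE_CLASSES
    )


# Hypothetical inventory entries, indexed by asset name for fast lookup.
inventory = {
    a.name: a
    for a in [
        Asset("checkout-api", "payments", "revenue-linked", "production", "external"),
        Asset("build-runner", "platform", "general", "development", "internal"),
    ]
}

print(is_high_value(inventory["checkout-api"]))  # True
```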

Step 3 Integrate Threat Intelligence and Scoring Models

Prioritization should not rely on CVSS alone. Introduce additional layers of threat awareness by

- Integrating KEV data to highlight vulnerabilities that are known to be exploited in the wild

- Applying EPSS scoring to estimate near-term exploitation likelihood

- Feeding in vendor-specific threat intelligence or open-source feeds where relevant

Make sure your tools and workflows can correlate this data with scanner output. This fusion is what turns static detection into contextual triage.
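The correlation described here amounts to a join on the CVE identifier. The sketch below shows the idea with in-memory stand-ins: `kev_ids` represents a set of identifiers taken from a KEV catalog and `epss_scores` represents a mapping loaded from an EPSS export. Both are hypothetical inputs with placeholder values, and the code deliberately makes no network calls.

```python
def enrich(findings, kev_ids, epss_scores):
    """Attach KEV membership and an EPSS probability to each finding.

    A finding with no EPSS entry gets None rather than a guessed value.
    """
    enriched = []
    for finding in findings:
        cve = finding["cve"]
        enriched.append(
            {
                **finding,
                "in_kev": cve in kev_ids,
                "epss": epss_scores.get(cve),
            }
        )
    return enriched


# Placeholder data: identifiers and probabilities are invented.
findings = [
    {"asset": "web-01", "cve": "CVE-0000-0001"},
    {"asset": "api-01", "cve": "CVE-0000-0002"},
]
kev_ids = {"CVE-0000-0001"}
epss_scores = {"CVE-0000-0001": 0.87}

for item in enrich(findings, kev_ids, epss_scores):
    print(item["cve"], item["in_kev"], item["epss"])
```

Leaving a missing EPSS value as `None` keeps the gap visible, so later scoring logic can decide how to treat unscored CVEs.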

Step 4 Build a Custom Prioritization Model

At this stage, define your scoring logic. This could include weighted variables such as

- Exploitability likelihood from EPSS

- Known exploitation from KEV

- Asset criticality score

- Exposure status such as public-facing or internal

- Business function impact

Design a rule-based or numerical scoring matrix and assign thresholds for categories such as

- Immediate remediation

- Scheduled remediation

- Monitor

- Accept or defer

This model can evolve, but you need a version one that your team can test and refine.
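A possible version one of such a model is sketched below as a weighted sum over the five variables listed in this step. The weights and thresholds are invented starting points rather than recommended values, and every input is assumed to be normalized to the range 0 to 1 before scoring.

```python
# ASSUMPTION: illustrative weights that sum to 1.0; tune for your environment.
WEIGHTS = {
    "epss": 0.35,
    "kev": 0.30,
    "asset_criticality": 0.20,
    "public_facing": 0.10,
    "business_impact": 0.05,
}

# ASSUMPTION: illustrative thresholds, checked from highest to lowest.
THRESHOLDS = [
    (0.75, "Immediate remediation"),
    (0.50, "Scheduled remediation"),
    (0.25, "Monitor"),
]


def score(finding):
    """Weighted sum of normalized inputs (each between 0 and 1)."""
    return (
        WEIGHTS["epss"] * finding["epss"]
        + WEIGHTS["kev"] * (1.0 if finding["in_kev"] else 0.0)
        + WEIGHTS["asset_criticality"] * finding["asset_criticality"]
        + WEIGHTS["public_facing"] * (1.0 if finding["public_facing"] else 0.0)
        + WEIGHTS["business_impact"] * finding["business_impact"]
    )


def categorize(value):
    """Map a score to one of the four remediation categories."""
    for floor, label in THRESHOLDS:
        if value >= floor:
            return label
    return "Accept or defer"


example = {
    "epss": 0.9,
    "in_kev": True,
    "asset_criticality": 1.0,
    "public_facing": True,
    "business_impact": 1.0,
}
print(categorize(score(example)))  # Immediate remediation
```

A rule-based alternative, for example "anything in KEV on a public-facing asset is always immediate", can sit in front of the numeric score when a hard override is preferred.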

Step 5 Automate the Triage and Ticketing Process

Automation is essential to scale. Once your prioritization model is defined, implement it into your security operations and IT workflows.

Recommended actions

- Use your vulnerability management platform or SOAR system to assign priority tags based on your model

- Auto-create tickets for high-priority items with pre-set SLAs and routing rules

- Suppress or group low-priority vulnerabilities to reduce noise

- Integrate with development and cloud tools to embed prioritization into CI/CD pipelines

This step helps your team move from reactive to proactive vulnerability response.
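The routing logic behind these actions can be sketched without tying it to a particular ticketing product. In the example below, tickets are plain dictionaries and the SLA values are hypothetical. An actual integration would hand each ticket to whatever API your ticketing or SOAR system provides.

```python
from datetime import datetime, timedelta, timezone

# ASSUMPTION: example SLA windows in days; categories without an entry
# are suppressed instead of ticketed.
SLA_DAYS = {
    "Immediate remediation": 2,
    "Scheduled remediation": 30,
}


def route_findings(findings, now):
    """Split findings into tickets with due dates and suppressed CVEs."""
    tickets, suppressed = [], []
    for finding in findings:
        sla = SLA_DAYS.get(finding["category"])
        if sla is None:
            suppressed.append(finding["cve"])
            continue
        tickets.append(
            {
                "title": f"Remediate {finding['cve']} on {finding['asset']}",
                "assignee": finding["owner"],
                "due": now + timedelta(days=sla),
            }
        )
    return tickets, suppressed


# Placeholder findings: identifiers, assets, and owners are invented.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
findings = [
    {"cve": "CVE-0000-0001", "asset": "web-01", "owner": "platform",
     "category": "Immediate remediation"},
    {"cve": "CVE-0000-0002", "asset": "api-01", "owner": "payments",
     "category": "Scheduled remediation"},
    {"cve": "CVE-0000-0003", "asset": "dev-01", "owner": "platform",
     "category": "Monitor"},
]
tickets, suppressed = route_findings(findings, now)
print(len(tickets), suppressed)
```

Passing `now` in as a parameter keeps the due-date calculation deterministic, which makes the routing rules easy to test.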

Step 6 Track Metrics and Improve Continuously

Measure the impact of your prioritization strategy using metrics such as

- Mean time to prioritize

- Mean time to remediate

- Number of KEV-tagged vulnerabilities closed within SLA

- Volume of alerts suppressed or deferred

- Reduction in patching workload per month

Use these insights to adjust thresholds, scoring weights, or asset classifications. Prioritization is not a one-time setup. It is a living system that should reflect current threats and business needs.
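Two of these metrics, mean time to remediate and KEV-tagged vulnerabilities closed within SLA, can be computed from a simple list of records. The record layout, the sample dates, and the 14-day SLA below are assumptions for the example.

```python
from datetime import date
from statistics import mean


def mean_days_to_remediate(records):
    """Average days from detection to fix, ignoring open findings."""
    durations = [
        (r["fixed"] - r["detected"]).days
        for r in records
        if r["fixed"] is not None
    ]
    return mean(durations) if durations else None


def kev_closed_within_sla(records, sla_days):
    """Count KEV-tagged findings that were fixed within the SLA window."""
    return sum(
        1
        for r in records
        if r["in_kev"]
        and r["fixed"] is not None
        and (r["fixed"] - r["detected"]).days <= sla_days
    )


# Placeholder records: dates are invented for illustration.
records = [
    {"detected": date(2025, 1, 1), "fixed": date(2025, 1, 4), "in_kev": True},
    {"detected": date(2025, 1, 1), "fixed": date(2025, 1, 21), "in_kev": True},
    {"detected": date(2025, 1, 1), "fixed": None, "in_kev": False},
]

print(mean_days_to_remediate(records))
print(kev_closed_within_sla(records, sla_days=14))
```

Open findings are excluded from the average here. A stricter variant could count them at their current age so that long-running items are not hidden.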

Step 7 Communicate Across Teams and Stakeholders

Effective prioritization cannot succeed in isolation. Involve your stakeholders by

- Educating engineering teams on how and why vulnerabilities are prioritized

- Providing dashboards or reports to executives showing progress and gaps

- Aligning security and compliance reporting to reflect prioritization outcomes

Clear communication ensures that prioritization becomes an organizational capability, not just a security task.

The Future of Vulnerability Prioritization

Vulnerability prioritization is evolving from a reactive practice to a predictive discipline. As threats grow more sophisticated and digital environments become increasingly dynamic, the future of prioritization will be defined by automation, contextual intelligence, and continuous learning.

Organizations that embrace these trends will not only patch faster; they will also make better security decisions, reduce operational friction, and align more closely with business strategy.

Predictive and Probabilistic Models Will Lead

Static scoring systems are fading in relevance. In their place, machine learning models like EPSS offer probability-based scoring that is refreshed regularly (EPSS publishes updated scores daily). These models assess exploitability not by theoretical vectors but by

- Analyzing exploit code availability

- Observing attacker chatter in dark web forums

- Monitoring inclusion in toolkits and malware strains

The next generation of these systems will likely incorporate threat actor targeting preferences, geographic data, and exploit chaining behavior, offering even more precise predictions.

As predictive models mature, they will form the core of triage decisions, supplemented by contextual business data.

Continuous Threat Exposure Management Will Become Standard

CTEM frameworks represent the future of proactive vulnerability management. Rather than waiting for a quarterly scan or an incident response trigger, CTEM operates continuously, assessing

- What is exposed

- How it can be exploited

- What has changed since the last assessment

This real-time visibility enables organizations to adapt immediately to new risks and enforce prioritization policies with speed and accuracy. It transforms security from a reactive defender to a real-time risk assessor.

Runtime and Behavioral Data Will Drive Decisions

Traditional scans only tell part of the story. Runtime insights (what services are doing, how data is flowing, which code paths are active) offer a more grounded view of exploitability.

Future prioritization will increasingly rely on

- Runtime telemetry from containers and cloud workloads

- Real-time traffic analysis

- User behavior analytics and access path modeling

This data will help answer questions like

- Is the vulnerable code actually being executed?

- Is the asset reachable under normal operating conditions?

- Would an attacker need multiple stages to reach this vulnerability?

These insights will sharpen prioritization and reduce alert fatigue.

Security Will Speak Business Language by Default

The future is not just about smarter models. It is also about better communication. Security teams will need to quantify risk in business terms, such as

- Potential financial impact

- Regulatory exposure

- Impact on customer-facing services or brand trust

Prioritization models will output not only technical scores but business-aligned risk categories. Dashboards will be designed for both engineers and executives. Reporting will focus on outcomes, not just actions.

This alignment will accelerate decision-making, clarify trade-offs, and increase investment in security initiatives.

Attack Path Mapping and Chaining Will Influence Prioritization

Attackers do not use CVSS scores. They use chains of weak points. Prioritization models of the future will incorporate attack path simulations, where

- One low-risk vulnerability leads to lateral movement

- Privilege escalation is possible through an unpatched internal service

- Data exfiltration depends on chaining multiple access points

By mapping how attackers move through systems, organizations can prioritize based on the entire path’s risk, not just isolated scores. This requires integrating vulnerability data with identity, network, and application telemetry.
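To make the idea of path-level risk concrete, the sketch below models an environment as a directed graph in which each edge carries an estimated probability that an attacker can make that hop. The graph, the node names, and the probabilities are invented, and multiplying them along a path assumes the hops are independent, which is a simplification.

```python
def most_likely_path(graph, start, target):
    """Return (probability, path) for the most likely route to the target.

    graph maps a node to a list of (next_node, hop_probability) pairs.
    Uses a depth-first search over simple paths, so it suits small graphs.
    """
    best_prob, best_path = 0.0, []

    def walk(node, prob, path):
        nonlocal best_prob, best_path
        if node == target:
            if prob > best_prob:
                best_prob, best_path = prob, path
            return
        for nxt, hop_prob in graph.get(node, []):
            if nxt not in path:  # avoid revisiting nodes (no cycles)
                walk(nxt, prob * hop_prob, path + [nxt])

    walk(start, 1.0, [start])
    return best_prob, best_path


# Hypothetical environment: probabilities are illustrative only.
graph = {
    "internet": [("web", 0.6), ("vpn", 0.1)],
    "web": [("db", 0.5)],
    "vpn": [("db", 0.9)],
}
prob, path = most_likely_path(graph, "internet", "db")
print(path, round(prob, 2))
```

In this toy graph the route through the web server is more likely overall than the route through the VPN, even though the final VPN hop has the highest individual probability. That is the kind of result an isolated per-vulnerability score would miss.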

AI Will Automate and Adapt Faster Than Humans

Artificial intelligence will play a major role in scaling prioritization decisions. AI can analyze huge volumes of data, spot trends, and update scoring rules without manual intervention.

Future systems will

- Learn from past remediation patterns

- Predict impact based on emerging CVEs and code similarity

- Automatically adjust risk thresholds based on business activity

This will reduce the need for constant human calibration and allow security teams to focus on higher-value strategic decisions.

Frequently Asked Questions

1. What is the difference between vulnerability prioritization and vulnerability management?

Vulnerability management is the broader process of identifying, assessing, and remediating vulnerabilities. Prioritization is the step that determines which vulnerabilities should be addressed first, based on risk, exploitability, and business context.

2. Can vulnerability prioritization be fully automated?

Not entirely. Automation can streamline triage using scoring models, exploit data, and rules, but business context and exception handling still require human oversight for accuracy and accountability.

3. How do you measure the effectiveness of a prioritization strategy?

Key metrics include mean time to prioritize (MTTP), patching coverage of known exploited vulnerabilities (KEVs), reduction in alert volume, and business-aligned risk reduction over time.

4. Why is CVSS not enough for prioritization?

CVSS is static and severity-focused. It does not reflect real-world exploitability, asset exposure, or operational relevance, which are critical for making smart triage decisions.

5. What are some common mistakes in prioritization workflows?

Relying on CVSS alone, ignoring business input, over-alerting without suppression, failing to loop in IT or DevOps, and neglecting runtime validation are frequent pitfalls.

6. How should prioritization align with CI/CD pipelines?

Prioritization should integrate with build systems and registries, flagging exploitable or high-risk vulnerabilities before deployment, and suppressing irrelevant ones based on context.

7. What if two tools give different prioritization scores?

Use scoring disagreement as a trigger to investigate. Combine scores with asset exposure and exploit data. Always anchor final triage in context, not just the number.

8. How can you visualize prioritization effectiveness?

Dashboards can track remediation time, exploit coverage, suppressed vulnerabilities, and high-risk asset exposure. Trend lines over time help prove value to leadership.

9. Can prioritization help in third-party risk management?

Yes. By correlating third-party software vulnerabilities with exploit data and usage context, organizations can more accurately assess vendor risk and enforce SLAs.

10. What does successful vulnerability prioritization look like?

It looks like fewer tickets, faster patching where it matters, better alignment with business priorities, and reduced exposure to exploited vulnerabilities, all with clear reporting and defensible decision-making.