Artificial Intelligence is no longer experimental. It’s running customer service, driving fraud detection, accelerating threat response, and influencing high-stakes decisions. According to a report, 78% of companies have adopted AI and 71% are actively using generative AI across their operations. Yet only 18% have a formal governance structure to manage how these systems are developed, deployed, and monitored.

That gap is already costing organizations. Nearly half have reported issues with their AI initiatives, from flawed outputs and privacy concerns to security exposure. The problem isn’t just adoption and outpacing policy. It’s AI being deployed in sensitive workflows without accountability, visibility, or control.

When AI touches regulated data, powers automated decisions, and integrates into core infrastructure, governance can’t be an afterthought. It needs to be built into how AI is selected, trained, deployed, and tested. The content ahead lays out a clear framework to make AI safer, more transparent, and aligned with enterprise risk goals, without slowing down innovation.

Why AI Governance Framework Matters?

AI systems are increasingly involved in:

- Automating threat detection and response

- Enhancing fraud prevention

- Accelerating vulnerability management

- Powering customer-facing chatbots and LLM-based tools

Without proper governance, AI systems can become:

- Opaque: Hard to audit or explain

- Biased: Trained on flawed or non-representative data

- Vulnerable: Susceptible to prompt injection, model theft, or misuse

AI use cases often touch sensitive data, influence customer experiences, or impact business operations. You must treat them with the same scrutiny as a critical application. These risks are especially concerning because AI use cases often intersect with sensitive data, influence key customer experiences, and drive business-critical decisions. As such, they must be governed and monitored with the same rigor as traditional critical applications.

Key Risks in AI Systems

- Model poisoning or adversarial attacks

- Prompt injection in LLMs

- Unauthorized model usage

- Shadow AI (unapproved tools)

- Data leakage and privacy violations

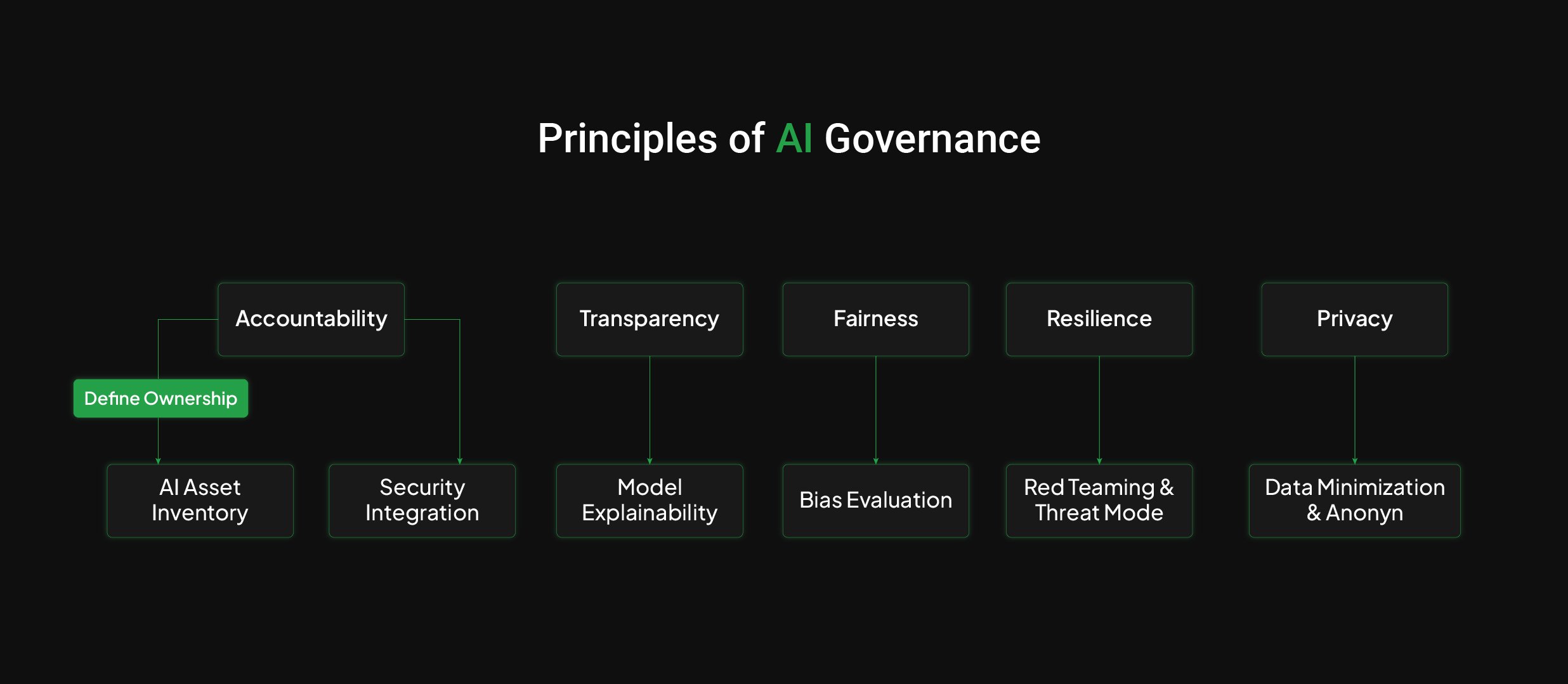

Principles of AI Governance Framework

Each principle should ensure that the organization’s use of AI is not only technologically sound but also aligns with applicable legal requirements, ethical standards, security best practices, and the broader expectations of the business. The following are some of the key principles to consider to implement a robust governance system over AI:

Accountability

- AI Asset Inventory: Maintain a comprehensive list of AI models, tools, and associated data assets across the organization.

- Security Integration: Ensure all AI systems are integrated into enterprise security processes (e.g., IAM, incident response).

- Goal: Assign clear ownership and embed AI into existing governance structures.

Transparency

- Model Explainability: Ensure models can be understood by stakeholders (e.g., how decisions are made, what features are used).

- Goal: Build trust and regulatory alignment through clear AI behavior.

Fairness

- Bias Evaluation: Assess models for demographic or systemic bias in predictions or outcomes.

- Goal: Reduce discrimination and ensure ethical AI deployment.

Resilience

- Red Teaming & Threat Modeling: Simulate attacks and identify vulnerabilities (e.g., adversarial inputs, data poisoning).

- Goal: Strengthen defenses against emerging AI threats.

Privacy

- Data Minimization & Anonymization: Limit personal data usage in training/inference and apply de-identification techniques.

- Goal: Ensure AI respects user privacy and complies with data protection laws (e.g., GDPR, HIPAA).

Core Principles of AI Governance | ||

Principle | Description | Implementation Strategy |

| Accountability | Who owns and approves AI decisions? | Maintain an AI asset inventory |

| Transparency | Can the system be explained? | Use explainable AI and model documentation |

| Fairness | Is the model equitable across groups? | Conduct regular bias evaluations |

| Resilience | Can it withstand abuse or attacks? | Perform red teaming , threat modeling & continoues threat intelligence |

| Privacy | Is user data protected and minimized? | Apply anonymization, masking, and logging |

The AI Governance Framework

A clear AI governance structure ensures alignment and oversight. This includes covering a broader spectrum in understanding the risks and their threat vectors to further determine & deploy appropriate controls. The following are certain security pillars to include in your AI governance strategies for developing a robust and flexible framework:

1. AI Risk Classification

Before applying security controls, it’s important to classify AI use cases by their risk level. Not all AI systems require the same level of protection—by understanding which projects are internal, external, or sensitive in nature, organizations can allocate resources more efficiently and apply stricter controls only where needed.

The following table outlines typical AI risk levels and associated concerns:

Risk Levels & Examples

Risk Level | Use Cases | Key Concerns |

| Low | Internal LLM tools (e.g., summarizers) | Low exposure, minimal impact |

| Medium | Internal report generators using sensitive data | Moderate business risk, data handling required |

| High | Customer support bots, fraud detection, and credit models | Business-critical, external-facing, compliance |

2. AI Security Baseline Controls

To secure AI systems effectively, organizations must implement baseline controls tailored to AI’s unique risks. These span across access, data, and model layers.

The table below highlights key control categories along with example implementations that form a foundation for AI security:

Control Category | Examples |

| Access Control | RBAC on model endpoints, API gating |

| Logging & Monitoring | Model calls, input/output logging |

| Data Handling | Input validation, sensitive data scrubs |

| LLM Security | Prompt filtering, content moderation |

| Model Hardening | Adversarial testing, input fuzzing, threat intelligence |

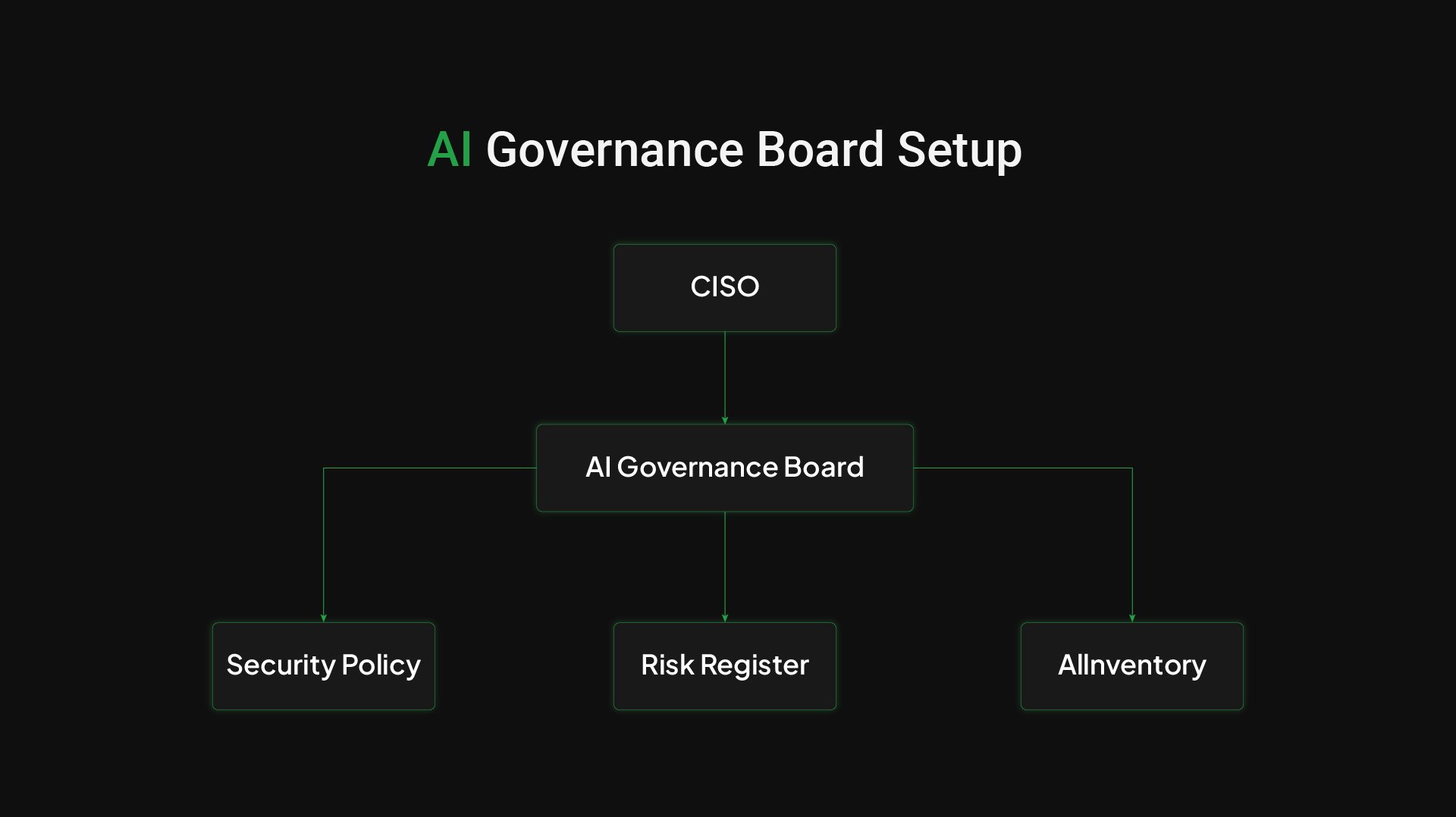

3. AI Governance Board Setup

To ensure accountable and balanced oversight of AI initiatives, organizations should establish a formal AI Governance Board. This cross-functional group enables efficient decision-making, enforces governance policies, and aligns AI efforts with business, legal, and risk requirements. A strong board typically includes:

- CISO (Chair) – Leads security and risk governance for AI systems.

- Chief Data/AI Officer – Drives AI strategy and ensures alignment with data policies.

- Legal & Compliance – Ensures adherence to regulatory, privacy, and ethical requirements.

- Engineering Leads – Provide technical insights and assess implementation feasibility.

- Risk Management – Identifies and tracks emerging AI-related risks across the organization.

4. Policy Frameworks

Establish clear policies and governance guidelines to ensure safe, compliant, and consistent use of AI across the organization. Focus areas include:

- Acceptable Use of AI & LLMs Across Departments

Define permissible use cases for AI tools—including LLMs—by different business units. Address responsible data usage, output review, copyright/IP concerns, and restrictions on public AI services.

- AI Procurement & Integration Reviews

Require formal review and approval of third-party AI tools before integration. Assess vendor practices, model transparency, data handling, and alignment with internal risk, legal, and compliance standards.

- Model Lifecycle SOPs (Training, Testing, Deployment)

Create standardized procedures for managing AI models through their lifecycle. Include processes for data selection, training validation, testing for robustness and bias, deployment criteria, and retirement or retraining triggers.

- AI Engineering Playbooks

Develop engineering best practices and secure development guidelines based on trusted frameworks like OWASP AI Security & Privacy Guide (AISVS) and NIST AI Risk Management Framework (AI RMF). These should cover secure coding, model evaluation, threat modeling, and ongoing risk monitoring.

5. Operationalizing Governance for AI

1. AI Inventory Management

Establish and maintain a dynamic, centralized inventory system that provides a comprehensive view of all AI-related assets. This includes:

- AI Models in Use: Track every deployed model across environments (development, testing, production), noting version, function, and status (active, deprecated, experimental).

- Linked Data Sources: Document all data sources feeding into each model—both training and inference-time data, including structured, unstructured, third-party, or proprietary data.

- Ownership and SLAs: Assign accountable owners (individuals or teams) for each model and data source, with clearly defined Service Level Agreements (SLAs) for performance, maintenance, and support. Include documentation on retraining cycles, monitoring requirements, and escalation processes.

2. Continuous Monitoring

Implement continuous oversight of your AI ecosystem using advanced security and observability tools:

- Use CNAPPs or Agentless Security Tools: Leverage Cloud-Native Application Protection Platforms (CNAPPs) or agentless monitoring solutions to reduce operational friction and maximize visibility.

- Scan AI Endpoints: Regularly inspect APIs, web interfaces, and model endpoints for vulnerabilities, misconfigurations, and exposure to unauthorized access.

- Detect Shadow AI Tools: Identify unauthorized or unsanctioned AI/ML tools and models that may be deployed by employees without governance oversight, similar to shadow IT.

- Monitor for Data Exfiltration: Continuously analyze data flows in and out of AI systems to detect anomalous behavior indicative of potential data leaks or misuse (e.g., sensitive data in model responses).

- Scan AI Endpoints: Regularly inspect APIs, web interfaces, and model endpoints for vulnerabilities, misconfigurations, and exposure to unauthorized access.

3. Pentest the AI (Adversarial Testing)

Conduct structured security testing that goes beyond traditional application testing to probe the unique vulnerabilities of AI systems:

- Prompt Injection Testing: Simulate malicious prompts intended to hijack or mislead large language models and other NLP-based systems to bypass controls or generate inappropriate output.

- Bias Exposure Analysis: Evaluate models for fairness by identifying and measuring bias in responses or predictions based on gender, race, socioeconomic status, etc.

- Model Inversion Attacks: Test if attackers can infer sensitive information about the training data by querying the model, especially relevant for models trained on private or regulated datasets.

- Other AI-Specific Test Cases:

- Membership Inference: Determine whether a given data point was used in training, which may violate data privacy guarantees.

- Output Leakage: Ensure the model does not unintentionally reveal sensitive or proprietary information in its outputs.

- Toxicity & Hallucination Checks: Validate that models do not produce harmful, offensive, or factually incorrect content under normal or edge-case use.

6. Metrics to Track

To effectively measure the maturity and enforcement of AI governance framework, it’s essential to track a set of actionable metrics. These indicators help assess adoption, coverage, testing rigor, and policy compliance across AI projects. The following table outlines key metrics to monitor and what each reveals about your AI security and governance posture:

Metric | Description |

| % AI projects with security review | Adoption of governance |

| Number of pentests on AI projects | Testing maturity |

| % coverage of AI Inventory | Visibility strength |

| AI risk exceptions approved | Governance enforcement gaps |

Final Thoughts

AI Governance is no longer optional; it’s a board-level concern. CISOs and security teams must shift from a reactive to a proactive security posture, embedding governance across AI systems. Mid-sized and enterprise organizations should start small but scale fast.

Start with inventory -> Add policy -> Drive risk-based controls -> Govern through metrics.

“The future of secure AI is not just about building smarter machines, it’s about building smarter rules around them.”