Security teams face constant pressure from an overload of alerts and findings. Every new scanner or assessment adds to the pile, making it hard to focus on what matters. Instead of streamlining efforts, these tools often create more confusion by repeating the same issues across reports. This is where vulnerability deduplication steps in as a quiet hero, cutting through the repetition to reveal the true state of risks.

- More findings do not always mean more risks; they often signal redundancy.

- Teams end up reacting to echoes rather than the root problems.

- This leads to inefficient use of time and resources.

Many organizations struggle because their security programs drown in duplicate entries. One vulnerability might show up five times from different tools, inflating the perceived workload without adding value. Vulnerability deduplication addresses this by merging identical or related findings into a single entry, ensuring teams deal with unique problems only. It does not hide risks; it sharpens the view.

This blog explores why vulnerability deduplication deserves more attention. We will break down its role in security operations, the damage caused by duplicates, and practical ways to implement it. By the end, you will see how this control can transform overwhelmed teams into focused ones, leading to better protection and efficiency.

1. What Deduplication Means in Security Operations

In security operations, vulnerability deduplication refers to the process of identifying and consolidating repeated vulnerability reports from various sources. Unlike storage deduplication, which exists to save space, it cleans up assessment outputs so teams avoid redundant work.

- It targets reports from scans, tests, and audits.

- The process uses matching logic to link similar entries.

- Outcomes include a streamlined view of threats.

Duplicates arise for several reasons.

- Multiple scanners might detect the same weakness in an application or system. For example, one tool flags a misconfiguration in a web server, and another does the same during a separate scan, creating two entries for one issue.

- Findings repeat across environments, such as development, staging, and production, where the same code base carries the same flaws.

- Retests after fixes can generate fresh alerts if the underlying problem persists or if tools interpret results differently.

Vulnerability deduplication tackles these by using rules to match findings based on criteria like CVE identifiers, affected assets, or exploit details. It groups them without losing context, so teams see the full picture in one place. Importantly, this is not about erasing data or ignoring threats. It preserves all original reports as references while presenting a unified view, making it easier to track and resolve.

- Rules can include hash comparisons or attribute matching.

- Context retention ensures no information loss.

- Unified views speed up analysis.
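The matching rules described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the field names (`cve`, `asset`, `port`, `scanner`), the scanner names, and the choice of attributes that make up the fingerprint are all assumptions made for the example.

```python
import hashlib
from collections import defaultdict

def fingerprint(finding: dict) -> str:
    """Build a stable key from attributes that identify the underlying issue."""
    parts = (
        finding["cve"].strip().upper(),      # normalize case differences between tools
        finding["asset"].strip().lower(),
        str(finding.get("port", "")),
    )
    return hashlib.sha256("|".join(parts).encode("utf-8")).hexdigest()

def deduplicate(findings: list[dict]) -> list[dict]:
    """Merge findings that share a fingerprint, keeping every raw report."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for finding in findings:
        groups[fingerprint(finding)].append(finding)

    merged = []
    for key, members in groups.items():
        merged.append({
            "fingerprint": key,
            "cve": members[0]["cve"].strip().upper(),
            "asset": members[0]["asset"].strip().lower(),
            "sources": sorted({m["scanner"] for m in members}),
            "raw_reports": members,  # originals preserved as references
        })
    return merged

# Hypothetical reports: two tools flag the same issue on web-01, one flags web-02.
reports = [
    {"scanner": "scanner_a", "cve": "CVE-2021-44228", "asset": "web-01", "port": 8080},
    {"scanner": "scanner_b", "cve": "cve-2021-44228", "asset": "WEB-01", "port": 8080},
    {"scanner": "scanner_a", "cve": "CVE-2021-44228", "asset": "web-02", "port": 8080},
]
unique = deduplicate(reports)
print(len(reports), "raw ->", len(unique), "unique")
```

The merged entry keeps a `raw_reports` list, which reflects the point made above: consolidation presents one view without discarding the original evidence.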

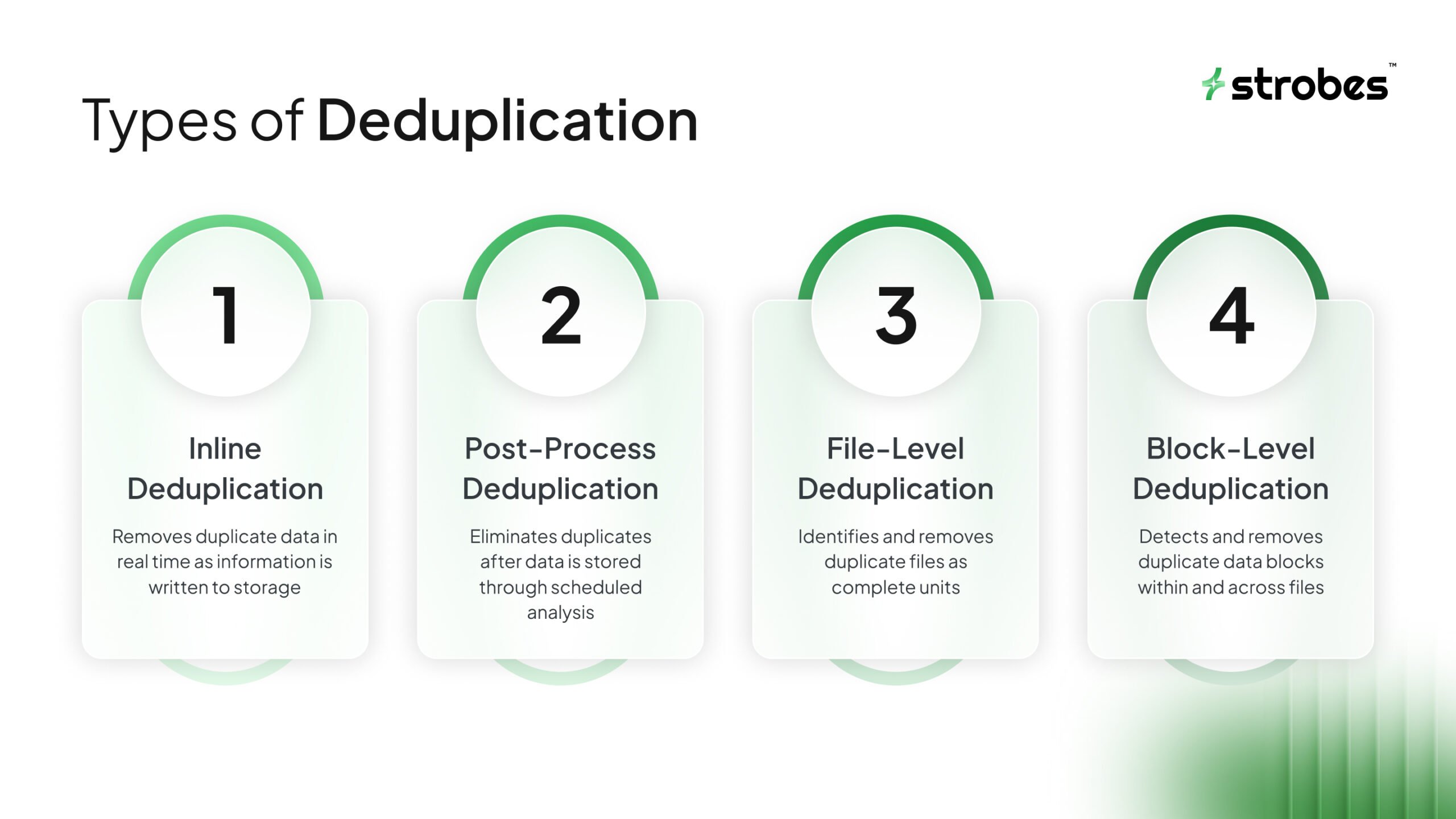

Types of Deduplication:

Deduplication is commonly described as a data efficiency technique, but its role in security is often missed. By collapsing repeated data into a single source of truth, deduplication limits unnecessary data spread and brings order to environments overloaded with copies of the same information. To understand why this matters for security outcomes, it helps to break deduplication into its core types.

Inline vs Post-Process Deduplication:

The main difference between these approaches is timing. Each affects performance, resource usage, and how quickly risk is reduced.

Inline Deduplication:

Inline deduplication works at the point of ingestion. As data enters a system, it is checked against existing data. If a match exists, only a reference is stored.

Why teams use it

- Prevents duplicate data from being written at all

- Keeps storage growth under control from day one

- Fits continuous data streams such as backups or telemetry

Operational tradeoffs

- Requires real-time processing

- Can add overhead if systems are not sized correctly

Security relevance

Inline deduplication limits how much data exists in the first place. Fewer copies mean fewer targets and clearer control over sensitive information, especially in environments with strict access enforcement.
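To make the ingestion-time check concrete, here is a toy sketch of inline deduplication. The `InlineDedupStore` class and its methods are hypothetical names invented for this example; real systems add persistence, concurrency control, and integrity checks that are left out here.

```python
import hashlib

class InlineDedupStore:
    """Toy content store that deduplicates at write time."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}     # digest -> the single stored copy
        self.references: dict[str, str] = {}  # record name -> digest

    def ingest(self, name: str, payload: bytes) -> bool:
        """Store the payload; return True only if new data was written."""
        digest = hashlib.sha256(payload).hexdigest()
        is_new = digest not in self.blobs
        if is_new:
            self.blobs[digest] = payload
        # Matching data is never written again; only a reference is recorded.
        self.references[name] = digest
        return is_new

    def read(self, name: str) -> bytes:
        return self.blobs[self.references[name]]

store = InlineDedupStore()
first = store.ingest("backup-monday", b"same telemetry batch")
second = store.ingest("backup-tuesday", b"same telemetry batch")
print(first, second, len(store.blobs))
```

Both names remain readable, but only one copy of the payload exists, which is the property the security argument above relies on.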

Post-Process Deduplication:

Post-process deduplication runs after the data is written. Full copies are stored first, then consolidated later through scheduled jobs.

Why teams use it

- Keeps ingestion fast

- Works well with existing systems and historical data

- Allows resource-heavy processing during low-usage periods

Operational tradeoffs

- Duplicate data exists until cleanup completes

- Storage use and exposure remain higher during that window

Security relevance

This approach supports audit and investigation needs but relies heavily on strong interim safeguards. Until consolidation happens, risk is spread across more locations.

File-Level vs Block-Level Deduplication:

This distinction focuses on how precisely data is compared.

File-Level Deduplication:

File-level deduplication treats each file as a single unit. If two files match exactly, one is retained and the rest reference it.

Strengths

- Simple and fast

- Works well for shared repositories and image libraries

Limitations

- Misses duplication inside files

- Less effective for large or frequently updated data

Security relevance

Reducing identical file copies limits the uncontrolled spread of sensitive content and simplifies audits.

Block-Level Deduplication:

Block-level deduplication breaks data into smaller segments and compares those segments individually.

Strengths

- Detects repetition inside files

- Delivers higher consolidation rates

- Scales well for large data volumes

Limitations

- Higher processing and metadata overhead

- Requires careful integrity management

Security relevance

By shrinking total data volume more aggressively, block-level deduplication reduces what can be copied, leaked, or misused. Strong validation is essential to keep references accurate.
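The difference between file-level and block-level comparison is easier to see with numbers. The sketch below uses fixed-size blocks and a tiny `block_size` purely for illustration; the file names and contents are made up, and production systems often use variable-size chunking rather than the fixed split assumed here.

```python
import hashlib

def file_level_unique(files: dict[str, bytes]) -> int:
    """Bytes stored when only whole-file duplicates are collapsed."""
    seen: dict[str, int] = {}
    for data in files.values():
        seen[hashlib.sha256(data).hexdigest()] = len(data)
    return sum(seen.values())

def block_level_unique(files: dict[str, bytes], block_size: int = 4) -> int:
    """Bytes stored when identical fixed-size blocks are collapsed."""
    seen: dict[str, int] = {}
    for data in files.values():
        for start in range(0, len(data), block_size):
            block = data[start:start + block_size]
            seen[hashlib.sha256(block).hexdigest()] = len(block)
    return sum(seen.values())

files = {
    "report_v1.txt": b"AAAABBBBCCCC",
    "report_v2.txt": b"AAAABBBBDDDD",    # differs from v1 only in the last block
    "report_copy.txt": b"AAAABBBBCCCC",  # exact copy of v1
}
raw_bytes = sum(len(d) for d in files.values())
print(raw_bytes, file_level_unique(files), block_level_unique(files))
```

File-level comparison only collapses the exact copy, while block-level comparison also catches the shared blocks inside the near-identical file, at the cost of tracking more hashes.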

2. How Duplicate Findings Quietly Break Security Programs

Duplicate findings create hidden chaos in security programs. They inflate the backlog, turning a manageable list of 100 unique issues into 500 entries. Teams then spend hours sorting through repetitions instead of fixing problems, leading to delays in addressing actual risks.

This volume misleads teams into thinking high alert counts mean high exposure, when often it is just an echo from overlapping tools. The result? Prioritization suffers because everything looks urgent. Operational impacts are clear:

Remediation cycles stretch from days to weeks as engineers chase shadows. AppSec and infrastructure teams experience fatigue from constant false urgency, reducing their effectiveness over time.

Worse, duplicates erode trust in tools and reports. When dashboards show inflated numbers, stakeholders question the data’s reliability. This disconnects security from business goals, where the focus should be on reducing exposure, not managing noise. In the end, organizations miss opportunities to mitigate real threats because resources get tied up in redundancy.

- Trust loss affects tool adoption.

- Business alignment suffers from misreported risks.

- Missed mitigations increase breach potential.

3. Severity Inflation Starts With Duplication

Duplication does not just add volume; it amplifies perceived severity. A single medium-risk vulnerability reported multiple times can appear as a cluster of high-priority items, skewing the overall risk assessment.

- Repeated reports create false clusters.

- Medium risks look critical in aggregates.

- Assessments become unreliable.

Leadership reports suffer most from this. What looks like a widespread crisis might be one issue echoed across scans. This leads to misguided decisions, like allocating budget to non-issues. Prioritization breaks down when teams cannot distinguish between a true escalation and artificial inflation. One vulnerability showing up ten times might get bumped up in queues, while unique critical flaws wait.

The waste is evident in remediation efforts. Engineers fix the same problem repeatedly, thinking they are different, only to realize later it was a duplicate. Vulnerability deduplication prevents this by consolidating entries early, ensuring severity reflects reality. Without it, teams chase inflated threats, leaving genuine risks unaddressed.
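A small worked example shows how repetition distorts a severity summary. The issue identifiers, severities, and source names below are invented, and the sketch assumes each report has already been mapped to a shared `issue_id`, which is the hard part in practice.

```python
from collections import Counter

# Hypothetical raw reports: (issue_id, severity, source)
raw_findings = [
    ("sqli-login", "medium", "scanner_a"),
    ("sqli-login", "medium", "scanner_b"),
    ("sqli-login", "medium", "scanner_c"),
    ("sqli-login", "medium", "pentest"),
    ("rce-upload", "critical", "scanner_a"),
]

def severity_counts(findings) -> Counter:
    """Count findings per severity level."""
    return Counter(severity for _, severity, _ in findings)

def deduplicated(findings) -> list:
    """Keep the first report seen for each issue_id."""
    unique = {}
    for issue_id, severity, source in findings:
        unique.setdefault(issue_id, (issue_id, severity, source))
    return list(unique.values())

before = severity_counts(raw_findings)
after = severity_counts(deduplicated(raw_findings))
print("before:", dict(before))
print("after: ", dict(after))
```

Before consolidation the medium issue accounts for four of five entries; afterwards the summary shows one medium and one critical, which is what actually exists.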

4. Deduplication as a Control Not a Feature

Vulnerability deduplication should be treated as a core control in security frameworks, not an optional add-on in tools. It directly influences key outcomes like accurate risk posture by providing a clean slate for analysis.

- Frameworks benefit from built-in cleanup.

- Outcomes tie to program maturity.

- Clean slates enable better planning.

With deduplication, teams gain real progress tracking.

Instead of closing multiple tickets for one fix, they close one, showing clear advancement. Ownership assignment speeds up because a single entry means one responsible party, reducing handoffs and confusion.

Compare this to established controls like access reviews, which ensure only authorized users reach sensitive areas, or logging quality, which maintains reliable audit trails. Vulnerability deduplication fits similarly by ensuring input data for decisions is precise. It turns reactive firefighting into proactive management, making it essential for mature security programs.

5. Where Deduplication Fits in the Security Lifecycle

Vulnerability deduplication integrates across the security lifecycle to maintain consistency. It starts before prioritization by cleaning raw data from scans, ensuring only unique findings enter the queue.

- Pre-prioritization cleanup sets the stage.

- Unique entries simplify queues.

- Consistency aids all phases.

During triage, it simplifies assignment:

One issue means one owner, avoiding debates over which duplicate to handle first. This streamlines workflows, allowing faster evaluation of exploitability and impact.

After remediation, deduplication helps track closure accurately. Fixes apply to the consolidated entry, preventing reopenings from lingering duplicates. In reporting, it delivers honest metrics, showing actual reductions in open issues rather than superficial activity.

6. Manual Deduplication Fails at Scale

At small scales, teams might handle duplicates manually through spreadsheets or meetings, but this approach collapses in larger setups. As organizations grow, the volume overwhelms human efforts.

- Small scales tolerate manual work.

- Growth exposes limitations.

- Volume leads to breakdowns.

Challenges mount in environments with multiple business units, where each runs its own scans, creating silos of repeated findings.

A cloud and on-prem mix adds complexity, as tools interpret the same vulnerability differently across platforms. Continuous scanning exacerbates this, generating fresh duplicates daily.

The issue is not lack of skill but sheer scale. Manual processes lead to errors, like missing matches or inconsistent rules, resulting in incomplete consolidation. Automated vulnerability deduplication solves this by applying consistent logic across all data, handling thousands of findings without fatigue.

7. What Effective Deduplication Actually Looks Like

Effective vulnerability deduplication goes beyond simple matching. It groups findings by root cause, analyzing underlying factors like shared code vulnerabilities rather than surface-level scanner outputs.

- Root cause focus deepens accuracy.

- Analysis goes beyond outputs.

- Grouping reveals patterns.

Asset-aware correlation is key, linking issues to specific hosts, applications, or containers for precise grouping. Time-aware tracking prevents reintroducing old issues by considering closure dates and scan histories.

- Asset links ensure relevance.

- Time factors avoid cycles.

- Histories inform decisions.
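Asset-aware and time-aware tracking can be sketched as a small state machine. Everything here is an assumption made for illustration: the `TrackedIssue` fields, the `(asset, weakness)` key, and the rule that a scan older than the closure date is treated as stale rather than as a regression.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional

@dataclass
class TrackedIssue:
    asset: str
    weakness: str
    status: str = "open"
    closed_at: Optional[datetime] = None
    sightings: list = field(default_factory=list)

def record_sighting(issues: dict, asset: str, weakness: str, scanned_at: datetime) -> str:
    """Fold a new scan result into the tracked issue for this asset and weakness."""
    key = (asset, weakness)
    issue = issues.get(key)
    if issue is None:
        issues[key] = TrackedIssue(asset, weakness, sightings=[scanned_at])
        return "created"
    if issue.status == "closed" and scanned_at <= issue.closed_at:
        return "ignored_stale"  # scan predates the fix, so it is not a regression
    issue.sightings.append(scanned_at)
    if issue.status == "closed":
        issue.status, issue.closed_at = "open", None
        return "reopened"
    return "merged"

def close(issues: dict, asset: str, weakness: str, closed_at: datetime) -> None:
    issue = issues[(asset, weakness)]
    issue.status, issue.closed_at = "closed", closed_at

issues: dict = {}
outcomes = [
    record_sighting(issues, "web-01", "CWE-89", datetime(2024, 1, 1)),
    record_sighting(issues, "web-01", "CWE-89", datetime(2024, 1, 5)),
]
close(issues, "web-01", "CWE-89", datetime(2024, 1, 10))
outcomes.append(record_sighting(issues, "web-01", "CWE-89", datetime(2024, 1, 8)))
outcomes.append(record_sighting(issues, "web-01", "CWE-89", datetime(2024, 1, 15)))
print(outcomes)
```

Across four scan results there is still only one tracked issue, and the late-arriving pre-fix scan does not reopen it.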

Context-driven consolidation pulls in data from multiple tools, using attributes like severity scores or exploit paths to merge accurately. This results in scanner noise reduction, where irrelevant repetitions vanish, leaving a focused list. Vulnerability correlation enhances this by identifying related weaknesses, not just exact duplicates, for a more thorough cleanup.

8. Deduplication and Risk-Based Workflows

Clean data from vulnerability deduplication enables true risk-based workflows. Teams can rank issues based on business impact, exploit likelihood, and asset value without distortion from duplicates.

- Clean data supports ranking.

- Factors include impact and likelihood.

- Distortion-free views guide priorities.

This shift moves focus from quantity to quality: fixing the right problems first. With fewer entries, remediation capacity stretches further and can be aimed at high-exposure areas.

Scanner noise reduction plays a big role here, as deduplication filters out echoes, allowing algorithms to score accurately. Vulnerability correlation ties in by grouping similar risks, revealing patterns that inform broader strategies. The outcome is a security program that aligns with organizational priorities, delivering measurable reductions in threats.

- Quality focus optimizes efforts.

- Capacity extension boosts coverage.

- Patterns inform strategies.
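Once the list is clean, ranking becomes a straightforward calculation. The sketch below multiplies the three factors named above; the scales, the multiplicative formula, and the sample issues are illustrative assumptions, not a standard scoring model.

```python
from dataclasses import dataclass

@dataclass
class Issue:
    name: str
    exploit_likelihood: float  # assumed scale: 0.0 to 1.0
    business_impact: int       # assumed scale: 1 to 5
    asset_value: int           # assumed scale: 1 to 5

    @property
    def risk_score(self) -> float:
        # Hypothetical formula: a simple product of the three factors.
        return self.exploit_likelihood * self.business_impact * self.asset_value

def rank(issues: list) -> list:
    """Order issues from highest to lowest risk score."""
    return sorted(issues, key=lambda issue: issue.risk_score, reverse=True)

issues = [
    Issue("outdated TLS config on intranet wiki", 0.2, 2, 2),
    Issue("SQL injection in payment API", 0.7, 5, 5),
    Issue("default credentials on staging host", 0.9, 3, 2),
]
ranked = rank(issues)
for issue in ranked:
    print(f"{issue.risk_score:5.1f}  {issue.name}")
```

If the same issue appeared several times in this list, it would crowd out other entries near the top, which is why consolidation has to happen before scoring.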

9. Why Deduplication Is Ignored in Most Tool Decisions

Tool selections often prioritize detection capabilities over operational efficiency, leaving vulnerability deduplication overlooked. Buyers chase advanced scanning features, assuming actionability comes later.

- Detection trumps efficiency in choices.

- Assumptions lead to gaps.

- Oversights create imbalances.

This creates long-term debt:

Tools generate findings without built-in cleanup, leading to bloated backlogs. Vendors highlight discovery metrics, like vulnerabilities found per scan, but downplay the need for consolidation.

As a result, organizations end up with powerful detectors but weak processors. Recognizing vulnerability deduplication as a must-have shifts evaluations toward tools that support it natively, ensuring sustainable operations.

- Debt accumulates over time.

- Vendor emphasis misleads.

- Native support sustains programs.

10. Measuring the Impact of Deduplication

To prove value, track specific metrics post-implementation.

- Start with a reduction in open findings: a 30-50% drop is common as duplicates consolidate.

- Mean time to remediate improves, often halving as teams handle unique issues faster.

- Fewer reassigned tickets indicate better ownership clarity.

Reporting to leadership becomes clearer, with dashboards reflecting true progress. Scanner noise reduction shows in lower alert volumes, while vulnerability correlation metrics highlight grouped efficiencies. These indicators demonstrate how deduplication drives real improvements.

- Finding reductions quantify savings.

- Remediation times measure speed.

- Metrics showcase efficiencies.
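Two of these metrics are simple to compute once ticket data is available. The helper names and the sample dates below are hypothetical; the 500-to-100 figures reuse the backlog example from earlier in this post rather than any measured result.

```python
from datetime import date
from statistics import mean

def reduction_pct(raw_count: int, unique_count: int) -> float:
    """Percentage drop in open findings after consolidation."""
    return 100.0 * (raw_count - unique_count) / raw_count

def mean_time_to_remediate(tickets: list) -> float:
    """Average days from opened to closed, counting closed tickets only."""
    durations = [
        (closed - opened).days
        for opened, closed in tickets
        if closed is not None
    ]
    return mean(durations)

# Hypothetical tickets: (opened, closed or None if still open)
tickets = [
    (date(2024, 3, 1), date(2024, 3, 11)),  # 10 days
    (date(2024, 3, 2), date(2024, 3, 8)),   # 6 days
    (date(2024, 3, 5), None),               # still open, excluded
]

drop = reduction_pct(500, 100)
mttr_days = mean_time_to_remediate(tickets)
print(f"open findings reduced by {drop:.0f}%, MTTR {mttr_days} days")
```

Tracking both numbers before and after rollout gives leadership a like-for-like comparison instead of a raw alert count.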

Conclusion:

Security programs rarely struggle due to a lack of data. They struggle because the same issues appear repeatedly, creating noise that hides what actually needs action. Vulnerability deduplication corrects this by restoring clarity at the source. It ensures teams work on unique problems, not repeated signals, and that prioritization reflects real exposure.

When duplication dominates, severity loses meaning, backlogs lose credibility, and remediation effort drifts. Deduplication brings structure back into the workflow. Each finding represents actual risk, ownership becomes clear, and progress reflects real reduction, not activity.

As environments expand across applications, cloud, and infrastructure, manual cleanup cannot scale. Effective deduplication must be continuous, context-aware, and automated to keep pace with incoming findings.

If your backlog keeps growing while risk remains unchanged, the problem is not effort. It is structural. Start by fixing how findings are consolidated, not by adding another source of noise.

See how Strobes helps teams cut through duplication, regain control of prioritization, and turn vulnerability data into clear, actionable outcomes.